Creating a Prompt tool

Prompt tools guide how AI agents respond to users during a conversation, including the exact format of the response. They can also help agents perform complex language tasks, such as determining the sentiment of customer feedback.

A Prompt tool pairs examples of natural-language input from the user with the expected format of the response. The LLM learns from these examples through few-shot learning to determine how to respond.
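To make the few-shot idea concrete, here is a minimal sketch of how instructions, example pairs, and a new input could be combined into a single prompt. It is illustrative only and does not describe how Agent Garden builds prompts internally. The function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sample feedback text are all assumptions made for this example.

```python
def build_few_shot_prompt(instructions, examples, user_input):
    """Combine instructions, worked examples, and a new input into one prompt string."""
    parts = [instructions.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # The new input goes last, with the output left open for the model to complete.
    parts.append(f"Input: {user_input}")
    parts.append("Output:")
    return "\n".join(parts)


# Hypothetical example pairs, invented for illustration.
examples = [
    ("The pasta was cold and the server ignored us.", "negative"),
    ("Great tacos and friendly staff!", "positive"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of the restaurant feedback as positive or negative.",
    examples,
    "We waited an hour for a table.",
)
print(prompt)
```

Each example pair shows the model both a typical input and the exact shape of the expected answer, which is why a small number of consistent examples is often enough to fix the output format.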

Use cases

Prompt tools are useful for tasks such as the following:

- Transform unstructured text into JSON format

- Receive customer feedback text and determine the sentiment behind the feedback

- Define a specific, structured format and tone for a report or summarization

You can combine a Prompt tool with an integration tool. For example, a Prompt tool helps the agent determine whether customer feedback is negative or positive. If the feedback is negative, the agent calls an integration tool to create a support case for the customer.
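The sketch below illustrates that decision flow in plain Python. It is not Agent Garden code: `create_support_case` is a hypothetical stand-in for an integration tool, and `route_feedback` assumes the Prompt tool returns a simple `positive` or `negative` label.

```python
def create_support_case(feedback):
    """Hypothetical stand-in for an integration tool that opens a support case."""
    return {"type": "support_case", "feedback": feedback}


def route_feedback(feedback, sentiment):
    """Decide what happens after the Prompt tool has labeled the feedback."""
    # Normalize the label so minor formatting differences do not change the outcome.
    if sentiment.strip().lower() == "negative":
        return create_support_case(feedback)
    # Positive feedback needs no follow-up action in this sketch.
    return None


case = route_feedback("The order arrived late and cold.", "negative")
print(case)
```

Constraining the Prompt tool to a small, fixed set of output labels keeps this kind of follow-up decision simple and predictable.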

Prerequisites

To create, edit, and deploy agents and tools, you must have one of the following roles:

- Agent Garden Administrator

- Agent Garden Developer

- A custom role with the following privileges: Agent Garden Access, Agent Create, Agent Edit, Agent Feedback Submit, Agent Garden Feedback View

Read Agent Garden: Getting Started for details on adding roles and setting up custom privileges.

Create a Prompt tool

Follow these steps to create a Prompt tool.

- Navigate to Agent Garden > Tools and click Create New Tool.

- Select Prompt.

- Click Add Tool.

- Enter a tool name. The character limit is 32.

- Enter a description of what the tool does. The Large Language Model (LLM) uses this description to understand the tool's purpose. The character limit is 150.

- Optional: Click Add Input Parameters to define the text that the user gives the agent during a conversation so that the agent can perform a task. For example, the user provides customer feedback text as a string. You can add up to 5 input parameters.

  - Enter a name to identify the input parameter.

  - Enter a description for the input.

  - Select the data type for the value of the input parameter.

  - Optional: Turn on the Required? toggle to require the agent to receive the input parameter from the user before it can continue.

  - Click Add Input Parameter.

- Click Save & Continue.

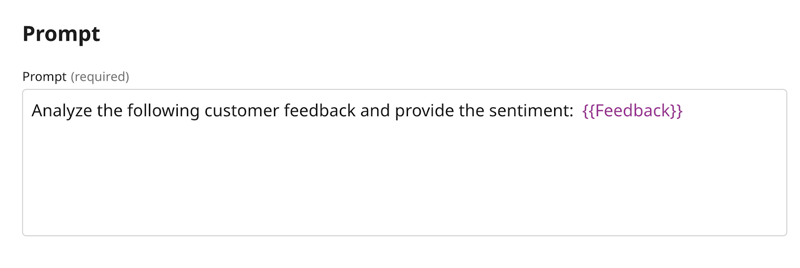

- In the Prompt field, add instructions that tell the LLM what you want it to do and how you want it to respond. Type a curly brace to select and insert an input parameter into the prompt. Write the instructions clearly so the LLM can follow them. For example, you can end an instruction with a colon followed by the input value to state what the LLM must analyze. This way, the input value comes after the instructions on what to do with it.

- Click Save & Continue.

- Optional but recommended: Click Add Example. Examples use few-shot learning to show the LLM how to respond to a given input. You can add up to 20 examples.

  - Add an input that shows a typical input from the user.

  - Add an output that shows how the agent should respond.

Here are examples of a Prompt tool that analyzes the sentiment of restaurant feedback:

- Click Save & Continue.

- Review the Prompt tool and click Deploy to activate it and add it to agents. Deployed tools show as active in the Tools list view and are available in the Tools tab to attach to an instruction when you create an agent.
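Before entering values in the UI, it can help to draft the tool offline and check it against the limits in the steps above (32-character name, 150-character description, up to 5 input parameters, up to 20 examples). The following is a minimal sketch for that purpose only. `PromptToolDraft` and `InputParameter` are hypothetical classes, not an Agent Garden API, and the single-brace `{feedback}` placeholder follows Python's `str.format` convention, which may differ from the syntax the Prompt field inserts.

```python
from dataclasses import dataclass, field

# Limits stated in the steps above.
MAX_NAME_CHARS = 32
MAX_DESCRIPTION_CHARS = 150
MAX_INPUT_PARAMETERS = 5
MAX_EXAMPLES = 20


@dataclass
class InputParameter:
    name: str
    description: str
    data_type: str = "string"
    required: bool = False


@dataclass
class PromptToolDraft:
    name: str
    description: str
    prompt: str
    input_parameters: list = field(default_factory=list)
    examples: list = field(default_factory=list)  # (input, output) pairs

    def validate(self):
        """Return a list of limit violations; an empty list means the draft fits."""
        problems = []
        if len(self.name) > MAX_NAME_CHARS:
            problems.append(f"name exceeds {MAX_NAME_CHARS} characters")
        if len(self.description) > MAX_DESCRIPTION_CHARS:
            problems.append(f"description exceeds {MAX_DESCRIPTION_CHARS} characters")
        if len(self.input_parameters) > MAX_INPUT_PARAMETERS:
            problems.append(f"more than {MAX_INPUT_PARAMETERS} input parameters")
        if len(self.examples) > MAX_EXAMPLES:
            problems.append(f"more than {MAX_EXAMPLES} examples")
        return problems

    def render(self, **values):
        """Fill the prompt's {placeholders} with input parameter values."""
        missing = [p.name for p in self.input_parameters
                   if p.required and p.name not in values]
        if missing:
            raise ValueError(f"missing required input parameters: {missing}")
        return self.prompt.format(**values)


# Hypothetical draft; all values are invented for illustration.
draft = PromptToolDraft(
    name="restaurant_feedback_sentiment",
    description="Determines whether restaurant feedback is positive or negative.",
    prompt=("Classify the sentiment of the following restaurant feedback "
            "as positive or negative. Feedback: {feedback}"),
    input_parameters=[InputParameter("feedback", "Customer feedback text", required=True)],
    examples=[("Great tacos and friendly staff!", "positive")],
)

print(draft.validate())
print(draft.render(feedback="The soup was cold."))
```

Note that the prompt places the input value after a colon at the end of the instructions, matching the guidance for the Prompt field.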

Next steps:

- Create another tool. Agents can use multiple tools to complete tasks.

- Build an agent