Writing tasks and instructions

Understanding how the Large Language Model (LLM) uses tasks, instructions, and tools to achieve the agent goal is key to delivering effective and useful AI agents. Following best practices when you design tasks and instructions reduces troubleshooting time and agent response errors. Refer to Building an agent and Testing and Troubleshooting an agent to learn more.

The Agent Designer employs a framework that selects the most suitable model for a given function, such as reasoning, summarization, and guardrails. It uses LLMs via Amazon Bedrock's managed service.

Key agent components

| Component | Description |
|---|---|
| Goal | The agent goal is a high-level statement that defines the agent’s overall purpose or objective. |
| Personality | Personality settings control the agent’s voice and tone, as well as its response and reasoning style. |
| Tasks | Tasks are functional units of work, or specific actions, that the agent performs to achieve its main goal. A task can have one or more tools attached to it. For example, “Fetch pending orders.” |
| Instructions | Instructions are natural language prompts that guide the Large Language Model (LLM) to complete a task. They influence how the agent interprets user inputs, how it responds, and how it behaves when performing a task. Instructions can express conditional logic (“if the user does this, do this”) and can prevent unwanted behaviors and responses (“do not do this”). For example, “If the user cannot provide an order ID, offer to search orders by first and last name.” |
| Tools | Tools are functions that extend an agent’s capabilities and help it achieve results related to a task. For example, an agent could use an API tool to call an API endpoint and retrieve an order status. A single tool can be shared and reused across multiple tasks and instructions. |

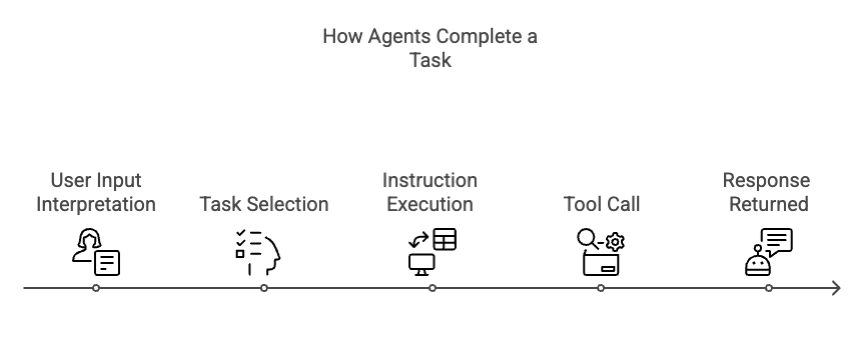
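The relationships in the table (an agent has one goal and several tasks, each task has its own instructions and tools, and one tool can be attached to more than one task) can be pictured as a small data model. This Python sketch is a hypothetical mental model only: the `Tool`, `Task`, and `Agent` classes, the `tasks_using` helper, and the "Cancel order" task are illustrative assumptions, not the Agent Designer's internal schema or API.

```python
from dataclasses import dataclass, field

# Hypothetical model for illustration only; not the Agent Designer's schema.


@dataclass(frozen=True)
class Tool:
    """A function the agent can call, such as an API endpoint."""
    name: str
    description: str


@dataclass
class Task:
    """A single functional unit of work with its own instructions and tools."""
    name: str
    description: str
    instructions: list[str] = field(default_factory=list)
    tools: list[Tool] = field(default_factory=list)


@dataclass
class Agent:
    goal: str
    personality: str
    tasks: list[Task] = field(default_factory=list)

    def tasks_using(self, tool_name: str) -> list[str]:
        """Return the names of every task that has the given tool attached."""
        return [t.name for t in self.tasks if any(tool.name == tool_name for tool in t.tools)]


# One tool object shared by two tasks.
order_api = Tool("Get Order Status", "Returns the status of an order by order number.")

agent = Agent(
    goal="Help customers with questions about their orders.",
    personality="Friendly and concise.",
    tasks=[
        Task(
            name="Check order status",
            description="Retrieve and display the status of a customer's order using their order number.",
            instructions=["If the user has not provided an order number, ask them for it."],
            tools=[order_api],
        ),
        Task(
            name="Cancel order",  # hypothetical second task reusing the same tool
            description="Cancel a pending order after confirming the order number with the user.",
            instructions=["Before cancelling, call the Get Order Status tool to confirm the order is still pending."],
            tools=[order_api],
        ),
    ],
)

print(agent.tasks_using("Get Order Status"))  # ['Check order status', 'Cancel order']
```

Because each task holds a reference to the same `Tool` object rather than a copy, the sketch reflects the idea that a tool is defined once and attached wherever it is needed.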

How agents complete a task

The LLM's reasoning determines how and when to start a task, execute its instructions, and use tools. Tools can be shared: you can attach the same tool to multiple tasks and instructions. The LLM retains user inputs and tool responses and can use that data to execute additional instructions.

Step 1 - User Input Interpretation: The LLM interprets the user’s prompt in the conversational interface.

Step 2 - Task Selection: Based on the intent it recognizes, the LLM selects the appropriate task from the list of tasks.

Step 3 - Instruction Execution: The LLM executes the instructions within the task. Executing a task may involve asking the user for more information, such as an input parameter. The LLM does not necessarily execute instructions in order from top to bottom; it follows its own reasoning. Based on conditions such as user inputs, the LLM may skip instructions that are not needed to complete the task.

Step 4 - Tool Call: If the task requires external data or actions, the LLM calls the associated tool. The LLM handles the tool’s response and integrates it into the conversation flow. The LLM retains information about tool responses and user inputs and can use that data to execute additional instructions.

When instructed, the LLM can also call a tool in a loop, using the same tool multiple times to complete a task. For example, the agent can call the same API endpoint three times, each time with different input parameters taken from a previous tool response or from the user, until it completes the task.
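The data flow of a looped tool call can be sketched in Python. This minimal sketch assumes a hypothetical paginated `list_orders_tool` whose response includes a cursor for the next page; each call feeds the cursor from the previous response into the next call. That mirrors an instruction such as “If the response includes a next-page cursor, call the tool again with that cursor until no cursor is returned.” In the product, the LLM decides when to repeat a call by reasoning over your instructions; this deterministic loop models only the inputs and outputs, and the `max_calls` cap is an assumed safeguard against endless looping.

```python
from typing import Optional

# Hypothetical stand-in for an API tool: each cursor maps to one page of
# orders plus the cursor for the next page (None when nothing is left).
FAKE_PAGES = {
    None: (["A-100", "A-101"], "p2"),
    "p2": (["A-102", "A-103"], "p3"),
    "p3": (["A-104"], None),
}


def list_orders_tool(cursor: Optional[str]) -> tuple[list[str], Optional[str]]:
    """Hypothetical tool call: return one page of orders and the next cursor."""
    return FAKE_PAGES[cursor]


def collect_all_orders(max_calls: int = 10) -> tuple[list[str], int]:
    """Call the same tool repeatedly, feeding each response's cursor into the next call."""
    orders: list[str] = []
    cursor: Optional[str] = None
    calls = 0
    while calls < max_calls:
        page, cursor = list_orders_tool(cursor)
        calls += 1
        orders.extend(page)
        if cursor is None:  # the tool response says there is nothing left to fetch
            break
    return orders, calls


orders, calls = collect_all_orders()
print(calls, orders)  # 3 ['A-100', 'A-101', 'A-102', 'A-103', 'A-104']
```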

Task best practices

A task is a modular objective that tells the agent what to do. It contains a single function that guides the agent's reasoning and actions. Tasks connect user intent with applicable instructions and tools. These best practices can help you create effective tasks.

- Break down the goal: Review your agent goal and break the goal down into small, clear, defined tasks.
- Be specific: Ensure each task has a specific, well-defined purpose. Tasks with discrete intent improve agent accuracy, traceability, and user experience.
- Avoid ambiguity: Create task descriptions that are clear and explain the outcome of the task. Task descriptions are considered when the LLM ranks tasks and makes a task selection.

Task examples

Good example

Task name: Check order status

Description: Retrieve and display the status of a customer's order using their order number.

Why it's good: This task has a precise definition and a single function. It indicates that the agent will receive an input from the user (order number) and respond with one output (status).

Bad example

Task name: Handle orders

Description: Handle orders like changing an order, canceling orders, etc.

Why it's bad: This task is too vague. “Handle” can refer to multiple tasks, such as changing an order, canceling an order, or checking its status. An ambiguous task can also require a large number of unrelated instructions, increasing the risk of agent errors, latency, and irrelevant tool calls.

Good example

Task name: Get account owner

Description: Return the name and email of the sales rep assigned to a specific account.

Why it's good: This task has a precise definition and one function: to find who owns the account. It indicates that the agent will receive an input from the user (account name) and respond with one output (name and email). The action requires one tool call to the CRM.

Bad example

Task name: Manage CRM data

Description: Handle CRM-related tasks like finding account owners, updating deal stages, logging notes, etc.

Why it's bad: This task is overloaded. It bundles unrelated workflows into one task and does not give the LLM enough clarity to determine which action the user wants to take. It also increases the risk of the agent asking unnecessary follow-up questions. An overloaded task can also require a large number of unrelated instructions.

Instruction best practices

Writing good instructions is essential to building an agent that is intuitive and helpful. Instructions are the natural language blueprint or playbook for how a task gets done. After the LLM interprets user intent and selects a task, the agent refers to its instructions to determine how to proceed. Instructions tell the agent how to do a task and also indicate conditional steps and fallback logic.

The agent reads all the instructions associated with a task and uses its reasoning to decide when to execute an instruction. It decides based on what’s relevant to execute in the current context of the conversation, what’s already known from memory or past tool calls, and any conditional logic you’ve added to the instructions.

Instructions shape the interaction between the agent and the user. Instructions can indicate when to ask follow-up questions, how to manage unclear or messy user inputs, and when to confirm information with the user before proceeding.

- Add details: Write instructions that offer detailed guidance. Assume the agent will not infer your intent and meaning unless you state them in the instructions.
- Use natural language: Write instructions as if you were explaining the task to a colleague.
- Anticipate user inputs: Write instructions that handle different user input scenarios. For example, “If the user provides the latitude and longitude, call the Weather API tool. Otherwise, if the user provides a city, find the latitude and longitude for that city and then call the Weather API tool with those parameters.”
- Include tool triggers: Indicate when the agent should invoke a tool, and refer to tools by name. For example, “After you receive the order number from the user, call the Order Status tool.”
- Include error handling: Indicate how the agent should handle scenarios where a tool fails.
- Include details about visualizations and images: Indicate when you want the agent's response to include an image pulled from your data source or from an image tool. AI agents can also render chart visualizations natively, without tools or data sources, and can infer which type of chart best presents the data. However, if you need a specific chart type, such as a bar graph, state that explicitly in the instruction.
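The weather instruction in the list above branches on what the user supplies. Writing those branches out as code is a quick way to check that every input scenario has a defined outcome. This Python sketch is illustrative only: `weather_api_tool` and the `CITY_COORDINATES` lookup are hypothetical stand-ins for real tools, and the unknown-city and missing-input branches are additions that show the error-handling and follow-up practices from the same list.

```python
from typing import Optional

# Hypothetical lookup table standing in for a "find coordinates for a city" step.
CITY_COORDINATES = {
    "Denver": (39.74, -104.99),
    "Boston": (42.36, -71.06),
}


def weather_api_tool(lat: float, lon: float) -> str:
    """Hypothetical Weather API tool; a real tool would call an HTTP endpoint."""
    return f"forecast for ({lat}, {lon})"


def handle_weather_request(lat: Optional[float] = None,
                           lon: Optional[float] = None,
                           city: Optional[str] = None) -> str:
    # Scenario 1: the user supplied coordinates, so call the tool directly.
    if lat is not None and lon is not None:
        return weather_api_tool(lat, lon)
    # Scenario 2: the user supplied a city, so resolve coordinates first.
    if city is not None:
        coords = CITY_COORDINATES.get(city)
        if coords is None:  # added error-handling branch
            return f"Sorry, I couldn't find coordinates for {city}."
        return weather_api_tool(*coords)
    # Added branch: neither input was given, so ask a follow-up question.
    return "Which city, or which latitude and longitude, should I check?"


print(handle_weather_request(city="Denver"))  # forecast for (39.74, -104.99)
```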

Instruction examples

Good example

Task name: Check order status

Instruction: If the user has not provided an order number, ask them for it. Once you have a valid order number, call the Get Order Status tool. Return the delivery status to the user. If the order cannot be found, apologize and suggest contacting support.

Why it's good: This example describes a logical flow from user input to action and response. It includes conditional logic with a simple “if... then” structure, specifies when the LLM should invoke the tool, and tells the agent what to do if the tool cannot find the order.
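One way to test an instruction is to read it as a decision procedure and confirm that each sentence maps to a branch. This Python sketch does that for the good example, with each original sentence as a comment above the code that implements it. The `ORDERS` data and `get_order_status_tool` function are hypothetical; the real agent follows the instruction through LLM reasoning, not through fixed code.

```python
from typing import Optional

# Hypothetical order data behind the Get Order Status tool.
ORDERS = {"1001": "Shipped", "1002": "Out for delivery"}


def get_order_status_tool(order_number: str) -> Optional[str]:
    """Hypothetical Get Order Status tool: returns None when the order is not found."""
    return ORDERS.get(order_number)


def check_order_status(order_number: Optional[str]) -> str:
    # "If the user has not provided an order number, ask them for it."
    if not order_number:
        return "Could you share your order number?"
    # "Once you have a valid order number, call the Get Order Status tool."
    status = get_order_status_tool(order_number)
    # "If the order cannot be found, apologize and suggest contacting support."
    if status is None:
        return "Sorry, I couldn't find that order. Please contact support for help."
    # "Return the delivery status to the user."
    return f"Order {order_number}: {status}"


print(check_order_status("1002"))  # Order 1002: Out for delivery
```

The bad example cannot be translated this way: “Use the tool when you can” and “Make sure they're happy” give no condition to branch on and no concrete response to return.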

Bad example

Task name: Check order status

Instruction: Look up the customer's order. Use the tool when you can. Make sure they're happy.

Why it's bad: This example is too vague and does not include enough detail to guide the agent. It lacks conditional logic for different scenarios and problems, such as a missing order number or a tool failure. It also does not name the tool or describe the action the tool takes, which makes it difficult for the agent to identify the right tool to use.