AI-Cataloging your agents

Using the AI-Catalog feature, you can upload your agent as a file instead of manually adding the details. The supported file types are PDF, DOCX, YML, XML, JPG and PNG upto 200 KB file size. You can add only one agent at a time.

Adding a new agent without a pre-existing custom account through the AI-Catalog feature creates a new custom account. However, in case of an existing account, for example, a Langchain provider uploading a new Langchain agent document automatically adds it to the Langchain provider.

Benefits of AI-Cataloging your agents:

- Rapid onboarding: Add new custom providers and agents instantly by uploading reference or configuration files.

- No manual setup: Automatically populates account and agent details, reducing manual effort and potential for errors.

- Broad file support: Accepts structured and semi-structured file types — PDF, DOCX, YML, XML, JPG, PNG (≤200 KB).

- Smart extraction: Detects and parses relevant fields such as provider metadata, agent details, tools, and relationships.

- Streamlined catalog expansion: Makes it easy to extend ACT to custom or non-native AI providers .

- Governed and traceable: Each upload is logged and creates an entry in the Activity log, ensuring visibility into all additions to the provider catalog. Failure/Errors are also audited.

- Consistent data capture: Ensures all custom provider entities follow a standardized format for display and management in the UI.

Expected requirements

The uploadable files need to ensure the following:

- The size of the file needs to be upto 200 KB.

- For a single provider, there should be a maximum of 10 agents with their details.

- For multi-providers, there should be a maximum of 10 providers and each provider should have only one agent each.

To add an agent using the AI-Catalog feature:

-

Navigate to Agentstudio > Agent Control Tower > Manage Providers > Custom Accounts.

-

Click +Add Agent.

-

In the Add Agent window that pops up, select AI Catalog.

-

Read and accept the Terms and Conditions that appear.

-

To add agent details, click Select File and choose the file from your local system. The file size must be a maximum of 200 KB.

-

Once you have selected your file, click Add.

InfoThe Add button is disabled while the file is being uploaded. You can add only one file at a time. Once you have added a file, selecting another will be greyed out. To add a different file, you must delete the last uploaded file.

-

Once the file is uploaded, click Add.

The +Add Agent button will be disabled while the uploaded agent is being added. The new agent will create a brand new Custom account. Once the process is complete, you can view the activity log. The AI-Catalog feature works on a file reading mechanism. Any file that does not meet the expected requirements or falls below the designated confidence score of 60 will be flagged and information for this error can be viewed in the activity log.

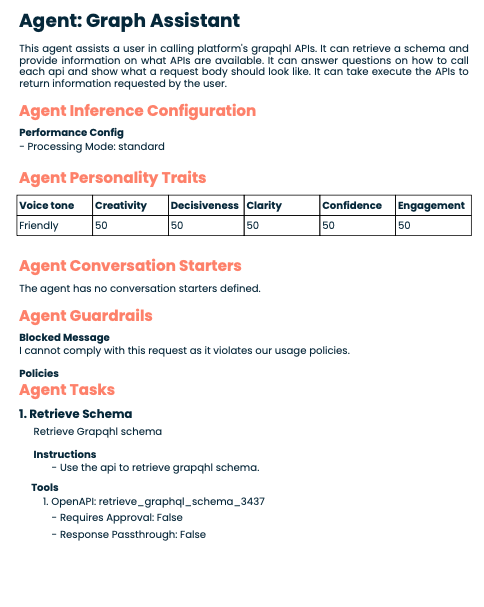

Examples of accepted input files

YML file

metadata:

exported_at: '2025-08-13T05:46:28Z'

schema_version: '1.0'

agents:

- objective: Recommend relevant training resources from Boomi training dataset to users

based on their skill or interest.

name: LearnBuddy

personality_traits:

voice_tone: Instructional

creativity: 60

decisiveness: 70

clarity: 90

confidence: 80

engagement: 75

profile_picture:

role: Assistant

colour: colour_white

image_id: img_location

conversation_starters:

- What skill are you looking to improve today?

- Are you aiming for a Boomi certification?

- Curious what your next learning step should be?

- Tell me your role or interests—I’ll recommend courses!

- Can I help you schedule a Gmeet?

tasks:

- name: Recommend Training Based on Role or Interest

objective: Use the JSON file from the Boomi S3 tool to suggest training courses relevant

to the user's stated skill, goal, or role.

instructions:

- Use the Boomi S3 JSON tool to fetch training data.

- Parse the JSON response to access course titles, categories, and descriptions.

- Filter or rank the course list based on relevance to the user’s input.

- Return top 3–5 relevant courses with short summaries.

- Offer a follow-up to explore more or get certification guidance.

- Avoid suggesting duplicate or highly similar courses unless requested.

- If no courses match, suggest categories and ask for preferences again.

- Respond clearly and helpfully, avoid jargon unless user is advanced.

- Keep the response length reasonable; offer to expand if needed.

tools:

- name: boomi_trainings

type: OpenAPI

requires_approval: false

response_passthrough: false

unique_name: s3jsonreader_1343

- name: Fetch Company Openings and Recruiters

objective: This task retrieves a JSON file from a secure S3 bucket or endpoint containing

the latest internal job openings and recruiter assignments across the company. It

will parse and expose this information for downstream tasks like recommendation,

filtering, or internal mobility agents.

instructions:

- Parse the JSON data into structured fields such as Req ID, Job Name, Grade, Hiring

Managers, Location, Openings, Recruiter, Department

- Ask the user for their role and openings (e.g., Integration Developer, Architect)

or interest (e.g., APIs, EDI).

- Use the Boomi S3 JSON tool to fetch training data.

- Filter or rank the course list based on relevance to the user’s input.

tools:

- name: FetchOpenings

type: OpenAPI

requires_approval: false

response_passthrough: false

unique_name: s3jsonreader_copy_1390

- name: Confluence page trainings

objective: Main task is to fetch the confluence pages and summarize the trainings

required specific to different teams.

instructions:

- Use the tool to connect to confluence

- List the teams whose set of trainings available on the conflunce page - when asked

- Use the links of the confluence pages to further look into each team's trainings

and recommend them to the user when asked with the team name

- Convert the names of the role or the team as role and use them with search confluence

pages tool

tools:

- name: Search Confluence Training Pages

type: OpenAPI

requires_approval: false

response_passthrough: false

unique_name: jiraboardfetcher_1116

- name: post timetable in slack

objective: post timetable in slack

instructions:

- 'Ask the user: What would you like to learn about or achieve?'

- 'Ask the user for the slack ID '

- "If either query or slackId is missing, show an error message: ‘Please specify

what you want to learn and make sure you're connected via Slack."

- Once both query and slackId are available, send them to the SlackConnect tool

via POST request.”

- Convert the names of the courses as the titles and use them with slack connect

tool.

- Convert the duration to duration and use them with slack connect tool.

- 'Convert the links to link and use them with slack connect tool. '

- Ask the user if they need help finding the slack ID and use the prompt tool to

help them

tools:

- name: SlackConnect

type: OpenAPI

requires_approval: false

response_passthrough: false

unique_name: confluncepagematcher_copy_1456

- name: formatCoursesAsJson

type: Prompt

requires_approval: false

response_passthrough: false

unique_name: formatcoursesasjson_1462

- name: Find Slack ID

type: Prompt

requires_approval: false

response_passthrough: false

unique_name: find_slack_id_1621

- name: Send mails

objective: Send mails given to, body, from, subject from process

instructions:

- It should be able to send mails

tools:

- name: send mails

type: Integration

requires_approval: false

response_passthrough: false

unique_name: send_mails_1465

- name: Schedule a meet

objective: Schedule a google meeting on Google calendar according to the user input

without fail

instructions:

- Create a calendar invite for a google meet using the user's input. Do this without

fail.

tools:

- name: Calendar - trial

type: OpenAPI

requires_approval: false

response_passthrough: false

unique_name: calendar_1602

guardrails:

custom_variables:

inference_configuration:

performance_config:

processing_mode: standard

unique_name: learnbuddy_1897

tools:

OpenAPI:

# --------------------------------------------------------

# OpenAPI Tool: s3jsonreader_1343

# IMPLEMENTATION REQUIREMENTS:

# - No modifications are required to import this tool.

# --------------------------------------------------------

# --------------------------------------------------------

# OpenAPI Tool: s3jsonreader_copy_1390

# IMPLEMENTATION REQUIREMENTS:

# - No modifications are required to import this tool.

# --------------------------------------------------------

# --------------------------------------------------------

# OpenAPI Tool: jiraboardfetcher_1116

# IMPLEMENTATION REQUIREMENTS:

# 2. Required headers:

# - Content-Type

# --------------------------------------------------------

# --------------------------------------------------------

# OpenAPI Tool: confluncepagematcher_copy_1456

# IMPLEMENTATION REQUIREMENTS:

# 2. Required headers:

# - Content-Type

# --------------------------------------------------------

# --------------------------------------------------------

# OpenAPI Tool: calendar_1602

# IMPLEMENTATION REQUIREMENTS:

# 1. Authentication: Token is required

# --------------------------------------------------------

- name: boomi_trainings

description: List of boomi trainings available

input_parameters: []

base_url: https://ai-hackathon-learnbuddy.vercel.app

path: /api/courses

method: GET

query_parameters: []

path_parameters: []

headers: []

unique_name: s3jsonreader_1343

- name: FetchOpenings

description: Fetch the details of the recruiters

input_parameters: []

base_url: https://ai-hackathon-learnbuddy.vercel.app

path: /api/recruitments

method: GET

query_parameters: []

path_parameters: []

headers: []

unique_name: s3jsonreader_copy_1390

- name: Search Confluence Training Pages

description: "Returns Confluence pages related to training based on a page name\n"

input_parameters:

- name: role

description: "the role or the Team name to search for training\n"

required: true

type: string

base_url: https://iui971vu8c.execute-api.us-east-1.amazonaws.com

path: /default/JiraBoardFuzzyMatcher

method: POST

query_parameters: []

path_parameters: []

headers:

- name: Content-Type

static_value: ''

request_body:

type: application/json

template: "{\n \"role\": \"{{role}}\"\n} "

unique_name: jiraboardfetcher_1116

- name: SlackConnect

description: Sends user queries to the scripts for training and resource recommendations.

input_parameters:

- name: modules

description: modules to be sent

required: true

type: string

- name: slackID

description: user's slack ID

required: true

type: string

base_url: https://learn-buddy-phi.vercel.app

path: /api/slackConnect

method: POST

query_parameters: []

path_parameters: []

headers:

- name: Content-Type

static_value: ''

request_body:

type: application/json

template: "{\r\n \"slackId\": \"{{slackID}}\",\r\n \"modules\": {{modules}}\r\n\

}\r\n"

unique_name: confluncepagematcher_copy_1456

- name: Calendar - trial

description: Schedules a meet on the calendar

input_parameters:

- name: dateTimeStart

description: Date time to set the meeting

required: false

type: string

- name: 'email '

description: Email to schedule the meet

required: false

type: string

- name: dateTimeEnd

description: End time for meet

required: false

type: string

- name: meetTitle

description: Title of the meet should be the course being taken by the user

required: false

type: string

base_url: https://www.googleapis.com

path: /calendar/v3/calendars/primary/events?conferenceDataVersion=1

method: POST

query_parameters: []

path_parameters: []

headers: []

authentication:

type: token_auth

config:

key: Authorization

value: ''

request_body:

type: application/json

template: "{\n \"summary\": \"{{meetTitle}}\",\n \"description\": \"\",\n \"start\"\

: {\n \"dateTime\": \"{{dateTimeStart}}\",\n \"timeZone\": \"Asia/Kolkata\"\

\n },\n \"end\": {\n \"dateTime\": \"{{dateTimeEnd}}\",\n \"timeZone\":

\"Asia/Kolkata\"\n },\n \"attendees\": [\n {\"email\": \"{{email }}\"}\n ],\n\

\ \"conferenceData\": {\n \"createRequest\": {\n \"requestId\": \"random-string-12345234556\"\

\n }\n }\n} "

unique_name: calendar_1602

Prompt:

# --------------------------------------------------------

# Prompt Tool: formatcoursesasjson_1462

# IMPLEMENTATION REQUIREMENTS:

# - No modifications are required to import this tool.

# --------------------------------------------------------

# --------------------------------------------------------

# Prompt Tool: find_slack_id_1621

# IMPLEMENTATION REQUIREMENTS:

# - No modifications are required to import this tool.

# --------------------------------------------------------

- name: formatCoursesAsJson

description: Converts a block of text listing course names and durations into a structured

JSON array of course modules for use in Slack learning plans.

input_parameters:

- name: text

description: Free-form course descriptions, e.g. "Integration Essentials (3 hr 5

min)"

required: false

type: integer

prompt: "From the text below, extract a JSON array of course modules.\r\n\r\nEach module

should have:\r\n- \"title\": the course name\r\n- \"duration\": the time in the format

such as \"3 hr 5 min\", \"15 min\", etc.\r\n\r\nOnly return the JSON array — no explanation.\r\

\n\r\nText:\r\n{{text}}"

examples:

- input: "{\n \"text\": \"Integration Essentials (3 hr 5 min)\\nAssociate Integration

Developer (5 hr 15 min)\\nProfessional Integration Developer (7 hr 12 min)\\nWorking

with Dynamic Connections (15 min)\"\n}\n"

output: "[\n {\n \"title\": \"Integration Essentials\",\n \"duration\": \"\

3 hr 5 min\"\n },\n {\n \"title\": \"Associate Integration Developer\",\n\

\ \"duration\": \"5 hr 15 min\"\n },\n {\n \"title\": \"Professional Integration

Developer\",\n \"duration\": \"7 hr 12 min\"\n },\n {\n \"title\": \"

Working with Dynamic Connections\",\n \"duration\": \"15 min\"\n }\n]"

unique_name: formatcoursesasjson_1462

- name: Find Slack ID

description: Help the user find their member ID in slack

input_parameters: []

prompt: "Open Slack and go to your workspace.\n\nClick on your profile picture in the

top right corner.\n\nSelect View Profile from the dropdown menu.\n\nIn the profile

window, click on the More button (three dots).\n\nChoose Copy Member ID from the options.\n

\nYour Slack Member ID is now copied to your clipboard and ready to use.\n"

examples:

- input: Where can I find my slack ID

output: Return the prompt given

- input: How to find the member id in slack

output: Return the prompt given

unique_name: find_slack_id_1621

Integration:

# --------------------------------------------------------

# Integration Tool: send_mails_1465

# IMPLEMENTATION REQUIREMENTS:

# 1. Create a new Integration process named 'hey'

# 2. Configure this process to:

# - Accept a dynamic process property to

# - Accept a dynamic process property name

# 3. Set up the following dynamic process properties:

# - to: Maps to input parameter mail

# - name: Maps to input parameter name

# --------------------------------------------------------

- name: send mails

description: send mails

input_parameters:

- name: mail

description: receiver mail

required: true

type: string

- name: name

description: sender's name

required: true

type: string

dynamic_process_properties:

to:

input_parameter_name: mail

name:

input_parameter_name: name

unique_name: send_mails_1465

- objective: Get a list of all available datasets (packages) from an open data portal, useful

for data discovery, market research, or identifying potential new data sources for analysis.

name: Data Discovery

personality_traits:

voice_tone: Instructional

creativity: 80

decisiveness: 75

clarity: 90

confidence: 85

engagement: 90

profile_picture:

role: Data

colour: Seabreeze

image_id: img_location

conversation_starters:

- Can you help me find relevant datasets for my market research project about electric

cars?

- I'm looking to identify new data sources for my data analysis. Can you assist?

- What are the latest datasets available on the open data portal?

- How can I discover datasets that could be useful for my business?

tasks:

- name: Retrieve Dataset List

objective: Get a list of all available datasets (packages) from the open data portal

instructions:

- Use the CKAN API to retrieve the list of all available datasets (packages) from

the open data portal

- Return the list of datasets in a clear and organized format

- Use the rows and start parameters to control the number of results and pagination

tools:

- name: CKAN API

type: OpenAPI

requires_approval: false

response_passthrough: false

unique_name: ckan_api_6011

- name: Provide Dataset Search

objective: Allow users to search and browse the available datasets

instructions:

- Use the CKAN API to search for datasets based on user input

- Present the search results in a user-friendly format, including relevant metadata

about each dataset

- Allow the user to navigate through the search results and view detailed information

about each dataset

tools:

- name: CKAN API

type: OpenAPI

requires_approval: false

response_passthrough: false

unique_name: ckan_api_6011

- name: Dataset Output Formatter

type: Prompt

requires_approval: false

response_passthrough: false

unique_name: dataset_output_formatter_6012

- name: Provide Dataset Recommendations

objective: Offer recommendations for related or similar datasets based on the user's

search

instructions:

- Use the CKAN API to find datasets that are similar or related to the user's interests

- Present the recommended datasets to the user, along with relevant metadata and

explanations for the recommendations

tools:

- name: CKAN API

type: OpenAPI

requires_approval: false

response_passthrough: false

unique_name: ckan_api_6011

- name: Dataset Output Formatter

type: Prompt

requires_approval: false

response_passthrough: false

unique_name: dataset_output_formatter_6012

guardrails:

blocked_message: I cannot provide access to datasets that are restricted or contain

sensitive information.

system: false

policies:

- name: Restricted Datasets

type: denied_topic

configuration:

description: Prevent access to datasets that are restricted or contain sensitive

information.

sample_phrases:

- Can you get me the classified government data?

- I need access to the confidential financial records.

- How can I get the private health data for my research?

- name: Prohibited Terms Policy

type: word_filter

configuration:

words:

- illegal

- unethical

- harmful

agent_mode: conversational

custom_variables:

inference_configuration:

performance_config:

processing_mode: standard

agent_io_schema_version: 1

unique_name: data_discovery_6239

- name: CKAN API

description: Retrieve a list of all available datasets (packages) from the open data

portal

input_parameters:

- name: q

description: Search query (optional)

required: false

type: string

- name: rows

description: Number of results to return (default is 10, maximum is 1000)

required: false

type: integer

- name: start

description: Result offset for pagination (default is 0)

required: false

type: integer

base_url: https://catalog.data.gov/api/3/action

path: /package_search

method: GET

query_parameters:

- name: q

input_parameter_name: q

- name: rows

input_parameter_name: rows

- name: start

input_parameter_name: start

path_parameters: []

headers: []

unique_name: ckan_api_6011

- name: Dataset Output Formatter

description: Present the search results in a user-friendly format, including relevant

metadata about each dataset

input_parameters:

- name: dataset_info

description: JSON object containing dataset information

required: true

type: string

prompt: "Given the following dataset information:\n{{dataset_info}}\n\nPlease format

this information in a clear, user-friendly way. Include the following details if available:\n

1. Dataset Title\n2. Description\n3. Organization\n4. Last Updated Date\n5. Available

Formats\n6. Topics or Tags\n7. Any other relevant metadata\n\nPresent this information

in a structured, easy-to-read format.\n"

examples:

- input: "{\n \"title\": \"Electric Vehicle Population Data\",\n \"description\"\

: \"Current registry of Battery Electric Vehicles (BEVs) and Plug-in Hybrid Electric

Vehicles (PHEVs) in Washington State\",\n \"organization\": \"State of Washington\"\

,\n \"last_updated\": \"2025-07-19\",\n \"formats\": [\"CSV\", \"RDF\", \"JSON\"\

, \"XML\"],\n \"topics\": [\"Transportation\", \"Electric Vehicles\", \"Vehicle

Registration\"]\n}\n"

output: "Dataset: Electric Vehicle Population Data\n\nDescription: Current registry

of Battery Electric Vehicles (BEVs) and Plug-in Hybrid Electric Vehicles (PHEVs)

in Washington State\n\nOrganization: State of Washington\nLast Updated: July 19,

2025\nAvailable Formats: CSV, RDF, JSON, XML\nTopics: Transportation, Electric

Vehicles, Vehicle Registration\n\nThis dataset provides information about:\n-

Vehicle makes and models\n- Electric vehicle types (BEV/PHEV)\n- Vehicle registration

details\n\nThis dataset could be useful for researchers, policymakers, or businesses

interested in electric vehicle adoption trends in Washington State.\n"

unique_name: dataset_output_formatter_6012

XML file

<?xml version="1.0" encoding="UTF-8"?>

<providers>

<provider>

<name>Langchain</name>

<type>Langchain</type>

<agents>

<agent>

<objective>Analyze and optimize cloud infrastructure performance and cost efficiency</objective>

<name>Test Agent 1</name>

<tasks>

<task>

<name>Cost Analysis</name>

<objective>Analyze cloud spending patterns and identify optimization opportunities</objective>

<instructions>

<instruction>Gather current cloud usage metrics and billing data</instruction>

<instruction>Identify underutilized resources and recommend rightsizing</instruction>

<instruction>Calculate potential savings from reserved instances</instruction>

<instruction>Generate cost optimization report with actionable recommendations</instruction>

<instruction>Monitor spending trends and alert on anomalies</instruction>

</instructions>

<tools>

<tool>

<name>CloudCostAnalyzer</name>

<type>OpenAPI</type>

<requires_approval>false</requires_approval>

<response_passthrough>false</response_passthrough>

<unique_name>cost_analyzer_001</unique_name>

<resources>

<resource>billing_api_endpoint</resource>

<resource>cost_metrics_database</resource>

</resources>

</tool>

<tool>

<name>ResourceOptimizer</name>

<type>Integration</type>

<requires_approval>false</requires_approval>

<response_passthrough>false</response_passthrough>

<unique_name>resource_opt_001</unique_name>

<resources>

<resource>optimization_engine</resource>

<resource>recommendation_service</resource>

</resources>

</tool>

</tools>

</task>

</tasks>

<guardrails>

<guardrail>

<name>Cost Threshold Guard</name>

<type>financial</type>

<threshold>10000</threshold>

<action>alert_and_approve</action>

</guardrail>

<guardrail>

<name>Security Policy Guard</name>

<type>security</type>

<policy>strict_compliance</policy>

<action>block_and_notify</action>

</guardrail>

</guardrails>

<llm_models>

<llm_model>

<name>GPT-4-Turbo</name>

<provider>OpenAI</provider>

<version>2024-04-09</version>

</llm_model>

</llm_models>

<tags>

<tag>infrastructure</tag>

<tag>cloud</tag>

<tag>optimization</tag>

<tag>monitoring</tag>

</tags>

</agent>

<agent>

<objective>Automate software testing and ensure code quality</objective>

<name>Test Agent 2</name>

<tasks>

<task>

<name>Test Automation</name>

<objective>Build and maintain test suites</objective>

<instructions>

<instruction>Develop test cases</instruction>

<instruction>Integrate with CI/CD</instruction>

<instruction>Run tests automatically</instruction>

<instruction>Log defects and test results</instruction>

</instructions>

<tools>

<tool>

<name>TestFramework</name>

<type>Integration</type>

<requires_approval>false</requires_approval>

<response_passthrough>false</response_passthrough>

<unique_name>test_framework_002</unique_name>

<resources>

<resource>selenium_grid</resource>

<resource>test_data_manager</resource>

</resources>

</tool>

</tools>

</task>

</tasks>

<guardrails>

<guardrail>

<name>Test Coverage Guard</name>

<type>quality</type>

<threshold>80</threshold>

<action>warning_and_report</action>

</guardrail>

</guardrails>

<llm_models>

<llm_model>

<name>GPT-4</name>

<provider>OpenAI</provider>

<version>2024-04-09</version>

</llm_model>

</llm_models>

<tags>

<tag>testing</tag>

<tag>automation</tag>

</tags>

</agent>

<agent>

<objective>Manage containerized workloads efficiently</objective>

<name>Test Agent 3</name>

<tasks>

<task>

<name>Container Management</name>

<objective>Orchestrate containers</objective>

<instructions>

<instruction>Deploy Kubernetes workloads</instruction>

<instruction>Monitor container performance</instruction>

<instruction>Update container images</instruction>

</instructions>

<tools>

<tool>

<name>ContainerOrchestrator</name>

<type>Integration</type>

<requires_approval>false</requires_approval>

<response_passthrough>false</response_passthrough>

<unique_name>container_orch_003</unique_name>

<resources>

<resource>kubernetes_api</resource>

<resource>docker_registry</resource>

</resources>

</tool>

</tools>

</task>

</tasks>

<guardrails>

<guardrail>

<name>Deployment Guard</name>

<type>operational</type>

<action>require_approval</action>

</guardrail>

</guardrails>

<llm_models>

<llm_model>

<name>Claude-3-Sonnet</name>

<provider>Anthropic</provider>

<version>20240229</version>

</llm_model>

</llm_models>

<tags>

<tag>devops</tag>

<tag>containers</tag>

</tags>

</agent>

<agent>

<objective>Analyze business data and deliver insights</objective>

<name>Test Agent 4</name>

<tasks>

<task>

<name>Data Analysis</name>

<objective>Extract insights from data</objective>

<instructions>

<instruction>Ingest raw data</instruction>

<instruction>Clean and normalize</instruction>

<instruction>Create visual reports</instruction>

</instructions>

<tools>

<tool>

<name>DataProcessor</name>

<type>Integration</type>

<requires_approval>false</requires_approval>

<response_passthrough>false</response_passthrough>

<unique_name>data_processor_004</unique_name>

<resources>

<resource>data_warehouse</resource>

</resources>

</tool>

</tools>

</task>

</tasks>

<guardrails>

<guardrail>

<name>Privacy Guard</name>

<type>privacy</type>

<action>anonymize</action>

</guardrail>

</guardrails>

<llm_models>

<llm_model>

<name>Gemini-Pro</name>

<provider>Google</provider>

<version>1.0</version>

</llm_model>

</llm_models>

<tags>

<tag>data</tag>

<tag>analytics</tag>

</tags>

</agent>

<agent>

<objective>Ensure security through vulnerability management</objective>

<name>Test Agent 5</name>

<tasks>

<task>

<name>Vulnerability Assessment</name>

<objective>Scan and fix system vulnerabilities</objective>

<instructions>

<instruction>Scan assets for CVEs</instruction>

<instruction>Classify and prioritize risks</instruction>

<instruction>Generate report for patching</instruction>

</instructions>

<tools>

<tool>

<name>VulnerabilityScanner</name>

<type>OpenAPI</type>

<requires_approval>false</requires_approval>

<response_passthrough>false</response_passthrough>

<unique_name>vuln_scanner_005</unique_name>

<resources>

<resource>vulnerability_database</resource>

</resources>

</tool>

</tools>

</task>

</tasks>

<guardrails>

<guardrail>

<name>Critical CVE Guard</name>

<type>security</type>

<severity>high</severity>

<action>immediate_escalation</action>

</guardrail>

</guardrails>

<llm_models>

<llm_model>

<name>GPT-2.0</name>

<provider>OpenAI</provider>

<version>2024-04-09</version>

</llm_model>

</llm_models>

<tags>

<tag>security</tag>

<tag>vulnerability</tag>

</tags>

</agent>

<!-- Agents 6 to 10 are short-form for brevity -->

<agent>

<objective>Improve search engine ranking through SEO strategies</objective>

<name>Test Agent 6</name>

<tasks>

<task>

<name>SEO Optimization</name>

<objective>Audit and optimize site SEO</objective>

<instructions>

<instruction>Analyze keywords</instruction>

<instruction>Optimize metadata</instruction>

<instruction>Generate report</instruction>

</instructions>

<tools />

</task>

</tasks>

<guardrails />

<llm_models />

<tags>

<tag>seo</tag>

</tags>

</agent>

<agent>

<objective>Translate product content into multiple languages</objective>

<name>Test Agent 7</name>

<tasks>

<task>

<name>Content Translation</name>

<objective>Translate marketing materials</objective>

<instructions>

<instruction>Use AI translation engine</instruction>

<instruction>Check for cultural nuances</instruction>

</instructions>

<tools />

</task>

</tasks>

<guardrails />

<llm_models />

<tags>

<tag>localization</tag>

</tags>

</agent>

<agent>

<objective>Monitor system health and alert on anomalies</objective>

<name>Test Agent 8</name>

<tasks>

<task>

<name>System Monitoring</name>

<objective>Track uptime and errors</objective>

<instructions>

<instruction>Monitor CPU/memory</instruction>

<instruction>Send alerts</instruction>

</instructions>

<tools />

</task>

</tasks>

<guardrails />

<llm_models />

<tags>

<tag>monitoring</tag>

</tags>

</agent>

<agent>

<objective>Create blog posts and marketing content</objective>

<name>Test Agent 9</name>

<tasks>

<task>

<name>Content Creation</name>

<objective>Generate written content</objective>

<instructions>

<instruction>Use GPT model</instruction>

<instruction>Optimize for tone and clarity</instruction>

</instructions>

<tools />

</task>

</tasks>

<guardrails />

<llm_models />

<tags>

<tag>content</tag>

</tags>

</agent>

<agent>

<objective>Automate customer support tasks</objective>

<name>Test Agent 10</name>

<tasks>

<task>

<name>Customer Response</name>

<objective>Handle inquiries via chatbot</objective>

<instructions>

<instruction>Use FAQ database</instruction>

<instruction>Escalate when needed</instruction>

</instructions>

<tools />

</task>

</tasks>

<guardrails />

<llm_models />

<tags>

<tag>support</tag>

</tags>

</agent>

</agents>

</provider>

</providers>

DOCX file

AI Agent Specifications and Configurations This document contains various AI agent specifications, configurations, and examples for testing the agent extraction system.

- LangChain Customer Service Agent Agent Name: Customer Support Assistant Framework: LangChain Purpose: Handle customer inquiries, provide product information, and escalate complex issues to human agents. Model: GPT-4 Capabilities: Natural language processing, knowledge base search, ticket creation, sentiment analysis Tools: Zendesk API, Product Database, FAQ System, Email Integration Knowledge Sources: Product documentation, FAQ database, previous support tickets, company policies

- CrewAI Research Assistant Agent Name: Research Analyst Framework: CrewAI Purpose: Conduct comprehensive research, analyze data, and generate detailed reports on various topics. Model: Claude-3-Sonnet Capabilities: Web scraping, data analysis, report generation, citation management Tools: Web Search API, Data Analysis Libraries, Report Templates, Citation Database Knowledge Sources: Academic databases, news sources, research papers, industry reports

- Salesforce AgentForce Sales Agent Agent Name: Sales Assistant Framework: Salesforce AgentForce Purpose: Qualify leads, schedule meetings, update CRM records, and provide sales support. Model: Einstein GPT Capabilities: Lead qualification, appointment scheduling, CRM updates, sales analytics Tools: Salesforce CRM, Calendar Integration, Email Automation, Lead Scoring Knowledge Sources: Salesforce data, customer profiles, product catalogs, sales playbooks

- ServiceNow AI IT Support Agent Agent Name: IT Support Specialist Framework: ServiceNow AI Purpose: Handle IT support tickets, troubleshoot technical issues, and provide self-service solutions. Model: ServiceNow AI Model Capabilities: Ticket management, technical troubleshooting, knowledge base search, automation Tools: ServiceNow Platform, Knowledge Base, Automation Engine, Integration Hub Knowledge Sources: IT knowledge base, previous tickets, system documentation, troubleshooting guides

- LlamaIndex Document Analysis Agent Agent Name: Document Analyzer Framework: LlamaIndex Purpose: Analyze and extract insights from large document collections, provide document summaries and Q&A. Model: Llama-2-70B Capabilities: Document indexing, semantic search, question answering, summarization Tools: Vector Database, Document Parser, Search Engine, Summary Generator Knowledge Sources: Document repository, PDF files, Word documents, web pages, databases

- AutoGPT Task Automation Agent Agent Name: Task Automator Framework: AutoGPT Purpose: Automate repetitive tasks, manage workflows, and execute multi-step processes autonomously. Model: GPT-4 Capabilities: Task planning, web automation, file management, API integration Tools: Web Browser, File System, API Clients, Task Scheduler Knowledge Sources: Task definitions, workflow templates, API documentation, user preferences

- Workday AI HR Assistant Agent Name: HR Assistant Framework: Workday AI Purpose: Handle HR inquiries, process employee requests, manage benefits, and provide HR policy guidance. Model: Workday AI Model Capabilities: Employee self-service, benefits management, policy guidance, reporting Tools: Workday HCM, Benefits Portal, Policy Database, Reporting Tools Knowledge Sources: HR policies, benefits information, employee data, compliance guidelines Summary This document contains examples of various AI agent frameworks and configurations that can be used to test the agent extraction system. Each agent has different capabilities, tools, and knowledge sources that should be properly identified and extracted.

PNG file