Creating a Data Quality Report agent

The Data Quality Report agent streamlines the analysis of incoming quarantined entities, so data stewards can review the report's high-level observations and act on them faster.

You'll use the following services in the Boomi Platform to build an AI agent:

- Agentstudio

- Integration

- DataHub

Prerequisites


Your account must have a Boomi public cloud instance to use the Agent step.

Agent overview

You can add artificial intelligence to your existing data stewardship business process using the Agent step.

In this example, we'll build an AI agent that:

- Takes quarantine query data from DataHub

- Analyzes quarantines for common patterns and insights

- Generates a report that lists high-, medium-, and low-risk data quality issues and potential match rule problems, and flags issues for review.

We'll build an integration that:

- Uses the Boomi DataHub connector to query quarantine entries

- Sends the data to the agent for report generation

- Emails the report to the data steward

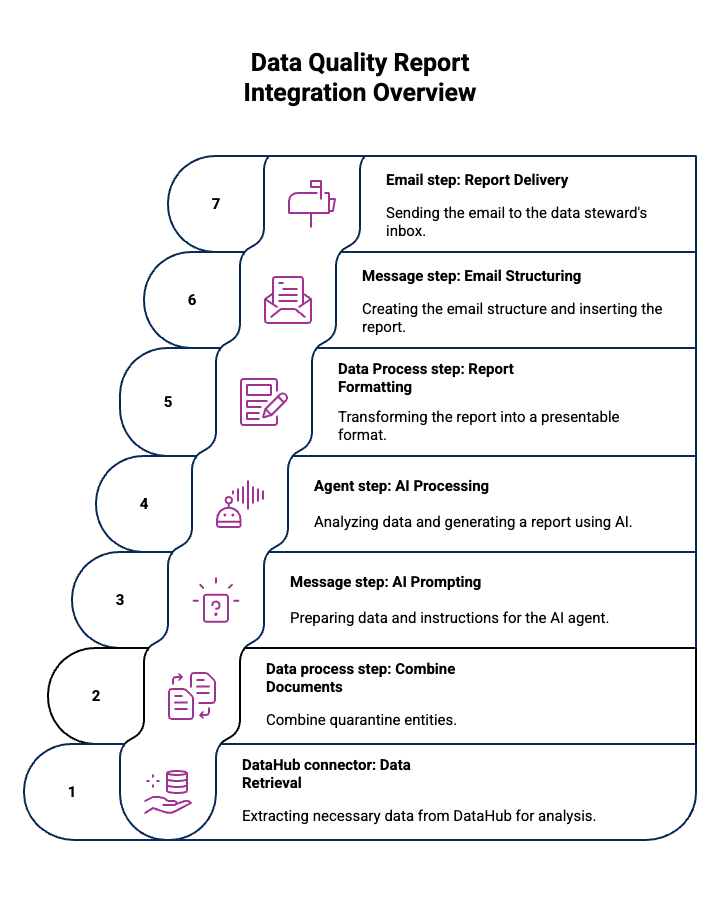

How the integration works

- The Boomi DataHub connector queries DataHub for quarantine data.

- A Data Process step combines all the quarantine entries into a single document for the agent to analyze.

- A Message step builds the prompt for the AI agent, combining the instructions with the quarantine data.

- The Agent step sends the prompt to the deployed AI agent and returns the agent's response.

- A Data Process step uses a custom script to convert the response into a format suitable for email.

- A Message step formats the email's structure.

- The Email step sends the report to the data steward's inbox.

Step 1: Create and deploy the report agent

- Navigate to Agentstudio > Agent Garden > Agents.

- Select Create New Agent.

- Do one of the following:

  - Select Blank Template > Build with AI and create your own version of the agent, customized to your needs. For example, you can add a Prompt tool to specify an exact layout for the report.

  - Recommended: Select Import Agent to import the Data Quality Report agent from a YAML file. This imports all the agent's settings, including personality, temperature, tasks, instructions, and guardrails, which you can then edit to fit your business requirements. Refer to Importing an agent. Use the following YAML configuration to create the file:

Agent YAML for import

```yaml
metadata:
  exported_at: '2025-07-25T20:25:53Z'
  schema_version: '1.0'
agents:
  - objective: Analyze quarantine data from DataHub, generate comprehensive data quality summary reports, and provide actionable recommendations for data stewards
    name: DataQualityGuardian
    personality_traits:
      voice_tone: Professional
      creativity: 75
      decisiveness: 90
      clarity: 95
      confidence: 85
      engagement: 80
    profile_picture:
      role: Data Quality Analyst
      colour: colour_blue
      image_id: img_location
    conversation_starters:
      - Can you analyze the quarantine data from DataHub and generate a quality report?
      - Generate a summary report of our data quality metrics
    tasks:
      - name: Load Quarantine Data
        objective: Retrieve and validate quarantine data from DataHub
        instructions:
          - Request user to provide specific quarantine dataset details
          - Verify data source and connection to DataHub
          - Validate data file format and completeness
        tools: []
      - name: Perform Data Quality Assessment
        objective: Conduct comprehensive analysis of data quality metrics
        instructions:
          - Analyze dataset for completeness, accuracy, consistency, and uniqueness
          - Identify and categorize different types of data issues
          - Calculate percentage of problematic records
          - Assess risk levels for each identified data issue
        tools: []
      - name: Generate Quality Report
        objective: Create detailed data quality summary report
        instructions:
          - Compile findings from data quality assessment
          - Create a structured report with data quality metrics
          - Include visual representations of data issues
          - Provide risk assessment for each identified problem
        tools: []
      - name: Develop Remediation Recommendations
        objective: Provide actionable recommendations for data stewards
        instructions:
          - Prioritize data issues based on risk and impact
          - Suggest specific corrective actions for each identified problem
          - Recommend data cleansing and validation strategies
          - Provide guidelines for preventing future data quality issues
        tools: []
    guardrails:
      blocked_message: I can only create a data quality summary report and provide data steward recommendations.
      system: false
      policies:
        - name: Legal advice
          type: denied_topic
          configuration:
            description: 'providing information about how to take legal action, contract recommendations, and providing advice about suing people. '
            sample_phrases:
              - Can you provide specific legal advice for my situation?
              - Can you draft a contract that will help me win my case?
              - What legal steps should I take to win a lawsuit?
        - name: business decision-making
          type: denied_topic
          configuration:
            description: suggestions and recommendations about making business decisions including financial strategy, security strategy, and data policies.
            sample_phrases:
              - Can you make strategic decisions for our company's merger?
              - Help me draft a detailed business acquisition plan
              - How can I bypass data protection regulations to gain a competitive advantage?
              - What's the most aggressive method to undermine our competitors' business?
        - name: Regex Pattern Validation_0
          type: regex_pattern
          configuration:
            pattern: ^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$
        - name: Regex Pattern Validation_1
          type: regex_pattern
          configuration:
            pattern: ^\d{3}-\d{2}-\d{4}$
        - name: Regex Pattern Validation_2
          type: regex_pattern
          configuration:
            pattern: ^\(?\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}$
    custom_variables:
      unique_name: dataqualityguardian_5386
    tools: {}
```
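The three `regex_pattern` guardrails target an email address, a US Social Security number format, and a 10-digit phone number. The following JavaScript sketch is a quick way to see what each pattern matches. It assumes the patterns are tested against an entire string, which is how JavaScript's `RegExp.test` treats `^` and `$` without the `m` flag. How Agentstudio actually applies these patterns (to user input or agent output, to whole messages or to parts of them) isn't described here. `GUARDRAIL_PATTERNS` and `matchingGuardrails` are hypothetical names used only for illustration.

```javascript
// Guardrail patterns copied from the agent YAML above.
// Assumption: each pattern is tested against the whole input string.
var GUARDRAIL_PATTERNS = [
  // Email address
  { name: "Regex Pattern Validation_0", pattern: /^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$/ },
  // US Social Security number format (123-45-6789)
  { name: "Regex Pattern Validation_1", pattern: /^\d{3}-\d{2}-\d{4}$/ },
  // 10-digit phone number, optional parentheses and separators
  { name: "Regex Pattern Validation_2", pattern: /^\(?\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}$/ }
];

// Hypothetical helper: return the names of the guardrails whose pattern matches.
function matchingGuardrails(text) {
  return GUARDRAIL_PATTERNS.filter(function (guardrail) {
    return guardrail.pattern.test(text);
  }).map(function (guardrail) {
    return guardrail.name;
  });
}

console.log(matchingGuardrails("steward@example.com"));
console.log(matchingGuardrails("Contact steward@example.com"));
```

The first call matches only the email pattern. The second returns an empty array because the patterns are anchored with `^` and `$`, so text surrounding the email address prevents a match.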

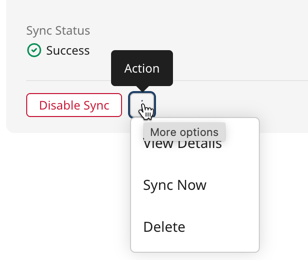

- Open the agent and select Deploy Agent.

- Navigate to Agent Control Tower > Manage Providers > Boomi Agent Garden.

- Click on the Account link.

- Select the Action icon > Sync Now. Syncing takes several minutes to complete. You can refresh to view the current status. A Success status indicates that the sync is complete.

Step 2: Set up the Boomi DataHub connector query operation

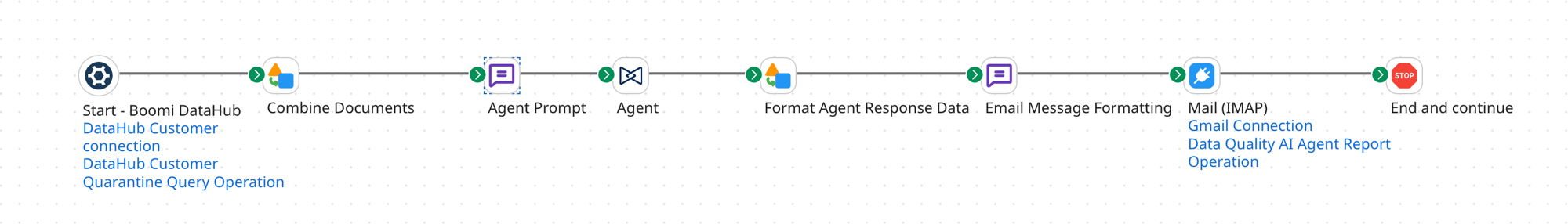

- Navigate to Integration > Create New Component and create a new process. You can name the process Data Quality Report.

- In the Start step, select the Boomi DataHub connector.

- Set up or attach a connection to the DataHub repository that contains the quarantined data. Refer to Creating a Boomi DataHub connection.

- Select the Query Quarantine Entries action. Refer to Query Quarantine Entries operation.

- Select the plus icon to create a new operation.

- Select Import Operation.

- Select the runtime. Your runtime must be online and attached to an environment. Refer to Adding a runtime cloud, Adding or editing environments, and Basic runtimes within an environment.

- Select Next.

- Select the deployed model that has quarantined entities.

- Select Next.

- Click Finish.

Step 3: Combine quarantine entities

- Add a Data Process step to the Build canvas.

- Add a processing step.

- In the Processing Step drop-down, select Combine Documents.
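Combining documents means the agent receives every quarantine entry in one prompt, instead of analyzing entries in isolation. The following JavaScript sketch shows that idea with plain strings. `combineDocuments`, the newline separator, and the `<entry>` markup are hypothetical. Boomi's Combine Documents step merges documents according to their profile, and this sketch does not reproduce that behavior.

```javascript
// Hypothetical illustration: join several documents into one, skipping empty ones.
// This is not how Boomi merges documents for a given profile; it only shows the idea.
function combineDocuments(documents, separator) {
  var nonEmpty = documents.filter(function (doc) {
    return doc.trim().length > 0;
  });
  return nonEmpty.join(separator);
}

// Hypothetical quarantine entries, one per document.
var entries = [
  "<entry><cause>POSSIBLE_DUPLICATE</cause><source>SFDC</source></entry>",
  "",
  "<entry><cause>PARSE_FAILURE</cause><source>QB</source></entry>"
];

var combined = combineDocuments(entries, "\n");
console.log(combined.split("\n").length); // 2
```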

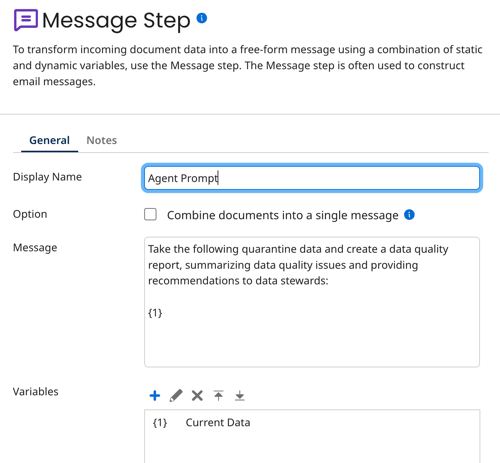

Step 4: Add an AI agent prompt

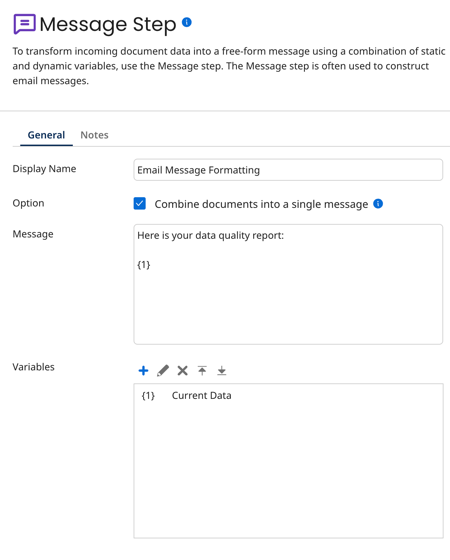

- Add a Message step to the Build canvas.

- In the Message field, enter the prompt for the AI agent:

  Take the following quarantine data and create a data quality report, summarizing data quality issues and providing recommendations to data stewards:

- In Variables, add a variable and select Current Data in the Type drop-down.

- Add the variable to the end of the prompt.

- Click OK.

- Save your process.
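In the Message step, each variable is referenced in the message text by its position in curly braces, such as `{1}` for the first variable. The following JavaScript sketch mimics that substitution so you can see what the agent receives. `fillMessage` is a hypothetical helper, not a Boomi API. It does not model Boomi's other formatting rules, such as how quote characters are handled.

```javascript
// Hypothetical helper approximating {n} placeholders:
// {1} is replaced by the first variable, {2} by the second, and so on.
function fillMessage(template, variables) {
  return template.replace(/\{(\d+)\}/g, function (match, index) {
    var value = variables[Number(index) - 1];
    // Leave unknown placeholders untouched rather than inserting "undefined".
    return value === undefined ? match : value;
  });
}

var prompt = fillMessage(
  "Take the following quarantine data and create a data quality report, " +
    "summarizing data quality issues and providing recommendations to data stewards: {1}",
  ["<combined quarantine entries>"] // stands in for the Current Data variable
);
console.log(prompt);
```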

Step 5: Add the Agent step

- In Platform > Settings > Platform API Tokens, create an API token. Copy and save the token; you'll paste it into the Agent step's authentication settings later in this step.

- In your process in Integration, add an Agent step to the Build canvas. The Agent step is available in all editions of Agentstudio. Contact your account representative if you have questions.

- Select Configure. The deployed agents that are synced in Agent Control Tower are displayed.

- Select your data quality agent (DataQualityGuardian if you imported the agent).

- Select Generate Configuration.

- Select the Authentication tab.

- Select Click to Set.

- Paste the API token and click Apply.

Step 6: Extract the agent response and format it

- Add a Data Process step to the Build canvas.

- Add a processing step.

- Select Custom Scripting in the Processing Step drop-down.

- Select JavaScript as the language.

- Add the following inline script, which extracts the agent response from the message events and formats it for email.

Inline script

```javascript
// Get the first document and its properties
var inputStream = dataContext.getStream(0);
var props = dataContext.getProperties(0);

// Read the full stream into a string, decoding it as UTF-8 so that
// multibyte characters in the agent response are not garbled
var reader = new java.io.BufferedReader(new java.io.InputStreamReader(inputStream, "UTF-8"));
var sb = new java.lang.StringBuilder();
var line;
while ((line = reader.readLine()) !== null) {
  sb.append(line).append("\n");
}
// Convert to a JavaScript string so split() and replace() accept a RegExp
var inputString = String(sb.toString());

// Split by blank lines to get the event blocks
var blocks = inputString.split(/\r?\n\r?\n/);

// Repeat a character n times (String.prototype.repeat is not available
// in every JavaScript engine)
function repeatChar(ch, n) {
  return new Array(n + 1).join(ch);
}

// Markdown to plain text converter
function convertMarkdownToPlainText(text) {
  // H1 (# Heading) → UPPERCASE + === underline
  text = text.replace(/^# (.+)$/gm, function (_, title) {
    var header = title.toUpperCase();
    return header + "\n" + repeatChar("=", header.length);
  });

  // H2 (## Heading) → Title + --- underline
  text = text.replace(/^## (.+)$/gm, function (_, title) {
    return title + "\n" + repeatChar("-", title.length);
  });

  // H3 (### Heading) → Just text, no underline
  text = text.replace(/^### (.+)$/gm, function (_, title) {
    return title;
  });

  // Bold **text** or __text__ → UPPERCASE
  text = text.replace(/(\*\*|__)(.*?)\1/g, function (_, __, boldText) {
    return boldText.toUpperCase();
  });

  // Bullet lists: - item → • item
  // ([ \t] instead of \s so blank lines before a list are not swallowed)
  text = text.replace(/^[ \t]*-[ \t]+/gm, "• ");

  // Numbered lists: keep intact
  return text;
}

// Process each block
for (var i = 0; i < blocks.length; i++) {
  var block = blocks[i];
  if (block.indexOf("event: message") !== -1 && block.indexOf("data: ") !== -1) {
    var lines = block.split(/\r?\n/);
    for (var j = 0; j < lines.length; j++) {
      if (lines[j].indexOf("data: ") === 0) {
        var jsonText = lines[j].substring(6).trim(); // strip "data: "
        try {
          var json = JSON.parse(jsonText);
          if (json.content) {
            var output = convertMarkdownToPlainText(json.content);
            // Wrap in java.lang.String so getBytes() is available
            var outStream = new java.io.ByteArrayInputStream(
              new java.lang.String(output).getBytes("UTF-8")
            );
            dataContext.storeStream(outStream, props);
          }
        } catch (e) {
          // skip malformed JSON
        }
      }
    }
  }
}

// Return false to prevent original doc from continuing
false;
```
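You can check the parsing and conversion logic outside Boomi by running a copy of it in Node.js. In the following sketch, `dataContext` is replaced with a plain string, and the functions return the converted text instead of storing documents. The sample event stream, with one valid `event: message` block and one malformed block, is made up for illustration. The real agent response may contain other event types. `extractMessages` is a hypothetical name.

```javascript
// Repeat a character n times without relying on String.prototype.repeat.
function repeatChar(ch, n) {
  return new Array(n + 1).join(ch);
}

// Same conversion rules as the inline script.
function convertMarkdownToPlainText(text) {
  text = text.replace(/^# (.+)$/gm, function (_, title) {
    var header = title.toUpperCase();
    return header + "\n" + repeatChar("=", header.length);
  });
  text = text.replace(/^## (.+)$/gm, function (_, title) {
    return title + "\n" + repeatChar("-", title.length);
  });
  text = text.replace(/^### (.+)$/gm, function (_, title) {
    return title;
  });
  text = text.replace(/(\*\*|__)(.*?)\1/g, function (_, __, boldText) {
    return boldText.toUpperCase();
  });
  return text.replace(/^[ \t]*-[ \t]+/gm, "• ");
}

// Return the converted "content" field of every "event: message" block.
function extractMessages(stream) {
  var results = [];
  var blocks = stream.split(/\r?\n\r?\n/);
  for (var i = 0; i < blocks.length; i++) {
    if (blocks[i].indexOf("event: message") === -1) continue;
    var lines = blocks[i].split(/\r?\n/);
    for (var j = 0; j < lines.length; j++) {
      if (lines[j].indexOf("data: ") !== 0) continue;
      try {
        var json = JSON.parse(lines[j].substring(6).trim());
        if (json.content) results.push(convertMarkdownToPlainText(json.content));
      } catch (e) {
        // Skip malformed JSON, as the inline script does.
      }
    }
  }
  return results;
}

// Hypothetical agent response: one valid message event and one malformed one.
var sample =
  'event: message\ndata: {"content": "# Data Quality Summary Report\\n## 1. Overview\\n- **SFDC**: 4 entries"}\n\n' +
  "event: message\ndata: {not json}\n";

console.log(extractMessages(sample)[0]);
```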

Step 7: Format the email

- Add a Message step to structure the email. You can add a welcome sentence, such as "Dear Data Steward, here is your report."

- Add a variable and select Current Data.

- Add the variable to the message.

Step 8: Add the Email step

- Add the Mail or Mail (IMAP) connector to the Build canvas. In this example, we're using the Mail (IMAP) connector with Google Gmail.

- Add your email connection. In this example, we created a Gmail connection and an App Password in the Google account.

- Inbound and Outbound User Name: youremail@gmail.com

- Inbound and Outbound Password: App password generated in Google.

- Inbound and Outbound Authentication Type: Basic

- Outbound Host: smtp.gmail.com

- Outbound Port: 587 (Gmail also accepts port 465, which uses SSL/TLS rather than StartTLS)

- Outbound Connection Security: StartTLS

- Inbound Host: imap.gmail.com

- Inbound Port: 993

- Inbound Connection Security: SSL/TLS

- Select SEND in the Action drop-down.

- Add an operation. In the From and To fields, enter your email address.

- Add an email subject. For example, Data Quality Report.

- Add a Stop step to the Build canvas.
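The port and the connection-security setting must agree. By convention, SMTP port 465 and IMAP port 993 use implicit TLS (the SSL/TLS option, per RFC 8314). Ports 587 (SMTP) and 143 (IMAP) start in plain text and upgrade with STARTTLS. The following JavaScript sketch encodes those pairings as a quick sanity check for your connection values. `EXPECTED_SECURITY` and `checkMailSettings` are hypothetical and are not part of the Mail (IMAP) connector.

```javascript
// Conventional port/security pairings for SMTP submission and IMAP access.
var EXPECTED_SECURITY = {
  smtp: { 465: "SSL/TLS", 587: "StartTLS" },
  imap: { 993: "SSL/TLS", 143: "StartTLS" }
};

// Hypothetical check: warn when a well-known port is paired with the other security mode.
function checkMailSettings(settings) {
  var warnings = [];
  ["outbound", "inbound"].forEach(function (direction) {
    var protocol = direction === "outbound" ? "smtp" : "imap";
    var conf = settings[direction];
    var expected = EXPECTED_SECURITY[protocol][conf.port];
    if (expected && expected !== conf.security) {
      warnings.push(direction + " port " + conf.port + " usually expects " + expected + ", not " + conf.security);
    }
  });
  return warnings;
}

console.log(checkMailSettings({
  outbound: { host: "smtp.gmail.com", port: 587, security: "StartTLS" },
  inbound: { host: "imap.gmail.com", port: 993, security: "SSL/TLS" }
}));
```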

Step 9: Test your process

Click Test to test your process. An email appears in your inbox.

You can schedule your process to run at regular intervals as needed. Refer to Process Schedules to learn more.

Here is a sample of the report output:

```text
DATA QUALITY SUMMARY REPORT
===========================

1. Overview
-----------
Total Quarantine Entries: 6
Time Period: December 2023 - July 2025
Source Systems: SFDC, QB

2. Data Quality Issues Breakdown
--------------------------------
Issue Distribution:
• POSSIBLE_DUPLICATE: 33.3% (2 entries)
• MULTIPLE_MATCHES: 33.3% (2 entries)
• REQUIRED_FIELD: 16.7% (1 entry)
• PARSE_FAILURE: 16.7% (1 entry)

By Source System:
• SFDC: 4 entries (66.7%)
• QB: 2 entries (33.3%)

3. Risk Assessment
------------------
High Priority Issues:
1. Multiple Matches (SFDC)
• Risk: High
• Impact: Potential data inconsistency and incorrect record linkage
2. Parse Failures (QB)
• Risk: High
• Impact: Data integration failure and incomplete records

Medium Priority Issues:
1. Possible Duplicates (SFDC)
• Risk: Medium
• Impact: Data redundancy and storage inefficiency

Low Priority Issues:
1. Required Field Issues (QB)
• Risk: Low
• Impact: Incomplete matching process

4. Recommendations for Data Stewards
------------------------------------
Immediate Actions:
1. For Multiple Matches (SFDC):
• Review and manually resolve the conflicting golden record matches
• Implement stronger matching criteria for future records
• Consider implementing a tie-breaker logic
2. For Parse Failures (QB):
• Update the data schema validation for QB integration
• Ensure all required fields (id, first_name, last_name, etc.) are properly mapped
• Implement pre-validation checks before data transmission

Short-term Improvements:
1. For Duplicate Management:
• Review and enhance duplicate detection rules
• Implement pre-emptive duplicate checking at data entry
• Consider implementing fuzzy matching algorithms
2. For Required Fields:
• Update QB data extraction process to ensure all required fields are populated
• Implement data completeness checks before transmission

Preventive Measures:
1. Implement real-time data validation at source
2. Establish regular data quality monitoring
3. Create automated alerts for recurring issues
4. Document and maintain clear data quality standards

5. Monitoring Recommendations
-----------------------------
• Implement daily monitoring of quarantine entries
• Set up alerts for sudden increases in specific error types
• Create weekly data quality dashboards
• Schedule monthly review of matching rules and criteria
```
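Each percentage in the Issue Distribution and By Source System sections is that category's share of the six quarantine entries, rounded to one decimal place. The following JavaScript sketch reproduces the arithmetic. The `causes` array restates the counts from the sample report, and `distribution` is a hypothetical helper.

```javascript
// Counts restated from the sample report (6 quarantine entries in total).
var causes = [
  "POSSIBLE_DUPLICATE", "POSSIBLE_DUPLICATE",
  "MULTIPLE_MATCHES", "MULTIPLE_MATCHES",
  "REQUIRED_FIELD",
  "PARSE_FAILURE"
];

// Hypothetical helper: count each value and express it as a share of the total.
function distribution(items) {
  var counts = {};
  items.forEach(function (item) {
    counts[item] = (counts[item] || 0) + 1;
  });
  return Object.keys(counts).map(function (key) {
    var percent = (counts[key] / items.length) * 100;
    var label = counts[key] === 1 ? " entry)" : " entries)";
    return key + ": " + percent.toFixed(1) + "% (" + counts[key] + label;
  });
}

console.log(distribution(causes).join("\n"));
```

Applying the same helper to the source systems (four SFDC entries and two QB entries) yields the 66.7% and 33.3% shown in the By Source System section.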