Pricing

Data Integration uses a consumption-based pricing model, where usage is measured in credits called Data Integration Pricing Units (RPUs). These credits are also referred to as Boomi Data Units (BDUs).

Boomi Data Units (BDU) Credit

Boomi Data Unit credits are the same as Data Integration Pricing Unit credits.

- Database and file storage sources are charged only for the amount of data transferred, measured down to the byte.

- Most application (API) based sources are charged for each execution of a data pipeline.

- Applications (APIs) with high-frequency replications can be charged for the amount of data transferred, similar to databases.

The charge is based on actual usage, not the number of rows, so you can scale in a flexible and transparent way.

BDU credit usage calculation

Credit usage is based on the data source and pipeline type:

| Data Source / Pipeline Type | Credit Cost |
|---|---|
| Application (API) Based Sources | By default, you are charged 0.5 credits every time you ingest data from a single output table. If your source is configured as a High-frequency sync source (under the Pro Plus or Enterprise editions), you are charged 1 credit per 100MB of data transferred (pro-rata), regardless of execution frequency. |
| Database Replication and File Storage Sources | For standard database, files and webhook replication, you are charged 1 credit per 100MB of data transferred (pro-rata), regardless of execution frequency. For log based CDC database replication, you are charged 2 credits per 100MB of data transferred (pro-rata), regardless of execution frequency. |
| Orchestration and Advanced Workflows (Logic and Transformations) | You are charged 0.5 credits for every execution of an entire workflow. |

Here are some examples:

| Scenario | Credit Cost |
|---|---|
| Ingesting deal information from your CRM once every 24 hours. | 0.5 credits a day |
| Ingesting both deal and contact information (each has a different output table) from your CRM every 8 hours, Monday through Friday. | 15 credits a week. 2 output tables * 3 times a day * 5 days a week * 0.5 BDU per execution = 15 |
| Running an orchestration workflow that pulls data from 5 different API sources, once a day. | 3 credits a day. 5 API pulls * 0.5 BDU per execution + 1 advanced workflow * 0.5 BDU per execution = 3 |
| You transfer 1,725MB of data per month between your Postgres database and your data warehouse. | 17.25 credits. 1,725MB / 100MB = 17.25 |
| You transfer 280MB of data per month from files on SFTP servers to your data warehouse. | 2.8 credits. 280MB / 100MB = 2.8 |

- Simple Source to Target pipelines do not require setting up a workflow to execute.

- Application (API) based source output tables refer to the different API calls required to pull different data entities from the same source. For example, if your data source is a CRM, there may be separate API output tables for deal information and contact data.

- For application (API) based source pipelines that transfer more than 100MB of data per execution, one credit is charged per 100MB of data. For example, 1 credit for an execution of up to 100MB of data transferred, 2 credits for an execution of 100MB - 200MB, and so on. If no data is detected in the execution, the charge is 0.5 credits.
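
The arithmetic in the examples table can be reproduced with a short Python sketch. The rates come from the tables above; the function and variable names are illustrative assumptions and not part of any Data Integration API, and the sketch covers only the default per-execution and pro-rata volume rules.

```python
from decimal import Decimal

# Rates taken from the credit cost table; all names here are illustrative.
CREDITS_PER_EXECUTION = Decimal("0.5")  # per output table ingest or workflow run
CREDITS_PER_100MB = {
    "standard": Decimal("1"),  # standard database, file, and webhook replication
    "cdc": Decimal("2"),       # log-based CDC database replication
}


def execution_credits(executions: int) -> Decimal:
    """Credits for per-execution billing (API output tables, workflows)."""
    return CREDITS_PER_EXECUTION * executions


def volume_credits(megabytes: float, kind: str = "standard") -> Decimal:
    """Pro-rata credits for data-volume billing."""
    return Decimal(str(megabytes)) / 100 * CREDITS_PER_100MB[kind]


# 2 output tables * 3 runs a day * 5 days a week
weekly_crm = execution_credits(2 * 3 * 5)
# 5 API pulls plus 1 workflow execution per day
daily_workflow = execution_credits(5) + execution_credits(1)
# Monthly data volumes from the last two scenarios
postgres_monthly = volume_credits(1725)
sftp_monthly = volume_credits(280)

print(weekly_crm, daily_workflow, postgres_monthly, sftp_monthly)
```

`Decimal` is used instead of floats so that fractional credit values such as 17.25 and 2.8 stay exact.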

For databases

- If you subscribed before April 1st, 2025, refer to the legacy pricing for accurate information regarding your database plan.

- If you subscribed on or after April 1st, 2025, the current page contains the relevant details for your database plan.

Database and file storage source prices differ from API sources

Data replication from database and file sources consumes less compute time and therefore costs Data Integration less, so these savings are passed on to our customers.

Python BDU calculation

The BDU of the Python Logic step is calculated by adding the cost of the script's total execution time to the cost of its network usage.

The Python Logic step BDU (logicode_bdu) will be charged regardless of the run status of the Logic Step.

Python pricing is based on:

- Execution time of the user’s Python script (seconds)

- Server size chosen to execute the script (see the table below)

- Network bandwidth - 0.4 BDU for every 100MB of data transferred

| Server Size | BDU per Minute | BDU per Hour |
|---|---|---|
| XS | 0.021 | 1.2 |
| S | 0.041 | 2.5 |
| M | 0.082 | 4.9 |
| L | 0.165 | 9.9 |
| XL | 0.329 | 19.7 |
| XXL | 0.3884 | 23.304 |
| XXXL | 0.492 | 29.52 |
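
As a rough illustration of how these components combine, the following sketch estimates the BDU for a single Python Logic step run. It assumes that execution time is billed pro-rata by the second at the per-minute rate and that network usage is billed pro-rata at 0.4 BDU per 100MB; the exact rounding applied in practice is not stated here, and the function name is hypothetical.

```python
# BDU-per-minute rates copied from the server size table above.
BDU_PER_MINUTE = {
    "XS": 0.021, "S": 0.041, "M": 0.082, "L": 0.165,
    "XL": 0.329, "XXL": 0.3884, "XXXL": 0.492,
}
NETWORK_BDU_PER_100MB = 0.4


def python_step_bdu(seconds: float, server_size: str, network_mb: float) -> float:
    """Estimate BDU for one Python Logic step run.

    Assumption: both execution time and network usage are billed pro-rata.
    """
    compute = seconds / 60 * BDU_PER_MINUTE[server_size]
    network = network_mb / 100 * NETWORK_BDU_PER_100MB
    return compute + network


# A 10-minute script on an M server that transfers 250MB.
estimate = python_step_bdu(seconds=600, server_size="M", network_mb=250)
print(round(estimate, 3))
```

Under these assumptions the example comes to about 1.82 BDU: 0.82 for compute (10 minutes at 0.082) plus 1.0 for network (2.5 * 0.4).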

Pricing editions table

| Account | Base | Professional | Pro Plus | Enterprise |
|---|---|---|---|---|
| Users | 2 | Unlimited | Unlimited | Unlimited |
| User Roles & Permissions | ✓ | ✓ | ✓ | ✓ |
| User Teams | ✓ | ✓ | | |
| Runtime Environments | 1 | 2 | 3 | Unlimited |
| Usage | Unlimited | Unlimited | Unlimited | Unlimited |
| Database Migrations | ✓ | ✓ | ✓ | ✓ |

| Connectors & Integrations | Base | Professional | Pro Plus | Enterprise |
|---|---|---|---|---|
| Data Sources (200+) | All | All | All | All |
| Targets | All | All | All | All |
| Multiple Targets | ✓ | ✓ | ✓ | ✓ |
| Kits (pre-built workflows) | ✓ | ✓ | ✓ | ✓ |
| Reverse ETL | ✓ | ✓ | ✓ | ✓ |
| Change Data Capture (CDC) | ✓ | ✓ | ✓ | ✓ |
| API High-frequency syncs | | | ✓ | ✓ |
| SQL Transformations | Unlimited | Unlimited | Unlimited | Unlimited |
| Python Transformations | Unlimited | Unlimited | Unlimited | |
| Max Sync Frequency | 60 min | 15 min | 15 min | 5 min |

| Extensibility | Base | Professional | Pro Plus | Enterprise |
|---|---|---|---|---|
| Build Your Own Data Source | ✓ | ✓ | ✓ | ✓ |
| Custom Targets / Actions | ✓ | ✓ | ✓ | ✓ |
| Webhooks / Events | ✓ | ✓ | ✓ | ✓ |
| Data Integration API Call Access | ✓ | ✓ | ✓ | |
| Command-Line Interface | ✓ | ✓ | ✓ | |

| Orchestration | Base | Professional | Pro Plus | Enterprise |
|---|---|---|---|---|
| Advanced Scheduling | ✓ | ✓ | ✓ | |
| Execution Logic & Branching | ✓ | ✓ | ✓ | ✓ |
| Sub-Rivers | ✓ | ✓ | ✓ | ✓ |
| Pipeline Dependencies | ✓ | ✓ | ✓ | ✓ |
| Built-In Versioning | ✓ | ✓ | ✓ | ✓ |
| Execution Logs | ✓ | ✓ | ✓ | ✓ |
| Monitoring & Alerts | ✓ | ✓ | ✓ | ✓ |

| Security | Base | Professional | Pro Plus | Enterprise |
|---|---|---|---|---|
| SOC 2 (Type II) & HIPAA | ✓ | ✓ | ✓ | ✓ |
| Custom File Zone | ✓ | ✓ | ✓ | |
| SSH Tunnel | ✓ | ✓ | ✓ | ✓ |
| Reverse SSH Tunnel | ✓ | ✓ | | |
| AWS PrivateLink / Azure Private Link | ✓ | ✓ | | |
| VPN (for database integration) | ✓ | | | |
| Single Sign-On (SSO) | ✓ | ✓ | | |
| Audit Log | ✓ | ✓ | | |

BDU usage

There are two areas where you can get information about your BDU consumption:

- Dashboard

- Activities

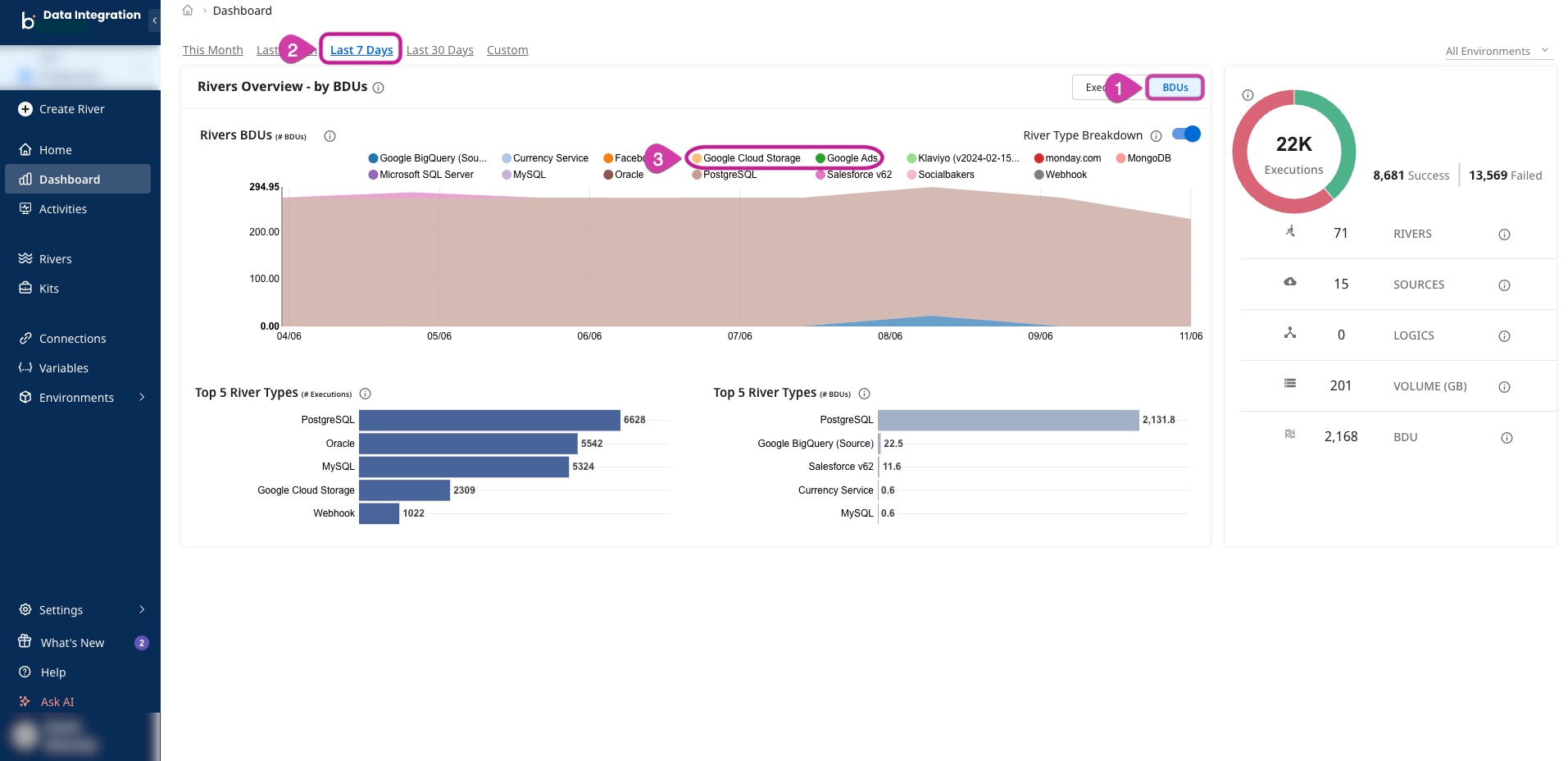

Dashboard

Click the Dashboard tab in the main menu to display a graph of your overall activity.

Procedure

- Navigate to Data Integration Console.

- Click the Dashboard tab from the main menu.

- Click the BDUs tab.

- Select the desired timeframe in the top-left corner of the page.

- Select one or more sources under Rivers BDUs to examine BDU consumption for these specific Rivers.

- The total amount of BDU for this timeframe is indicated in the bottom-right corner.

Activities

The Activities tab shows your total BDU usage.

Procedure

- Navigate to Data Integration Console.

- Click the Activities tab from the main menu.

- Find your River.

- View the total BDU amount on the right.

Python BDU usage

The Logic steps icon can only be found in a Logic River that uses Python.

Procedure

- Navigate to Data Integration Console.

- In the Activities tab, click the row that highlights the River.

- Click Download Logs.

- Search for logicode_bdu_per_step to locate the Python BDU calculated in this run.
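
If you prefer to search the downloaded logs with a script rather than a text editor, a minimal sketch might look like the following. It assumes only that the logs are plain text; the function names are hypothetical, and the sample string is a made-up placeholder that does not represent the real log format.

```python
from pathlib import Path


def find_bdu_lines(log_text: str, key: str = "logicode_bdu_per_step") -> list[str]:
    """Return every log line that mentions the given key."""
    return [line for line in log_text.splitlines() if key in line]


def find_bdu_lines_in_file(path: str) -> list[str]:
    """Read a downloaded log file (path supplied by you) and filter it."""
    text = Path(path).read_text(encoding="utf-8", errors="replace")
    return find_bdu_lines(text)


# Placeholder text only; the actual log layout may differ.
sample = "first line\nplaceholder logicode_bdu_per_step placeholder\nlast line"
print(find_bdu_lines(sample))
```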

Free Trial features and limits

The Data Integration free trial includes access to all of the Professional plan features for 14 days or 1,000 free credits (worth $1,200) of usage, whichever comes first.

When your free trial ends, you can continue using Data Integration by registering for any on-demand plan, or explore our annual and Enterprise plans.

To upgrade your plan, refer to Subscription & Billing.

- Data Integration does not charge per connector and there is no minimum or maximum on the number of connectors you can use. You get the best single source to efficiently align your data from internal databases and third-party platforms.

- Data Integration does not charge per user.

  - The Base edition is limited to 2 users.

  - All other editions include unlimited users.

- Data Integration does not charge per environment, although each plan has a maximum number of environments:

  - The Starter plan is limited to 1 environment.

  - The Professional plan is limited to 3 environments.

  - The Enterprise plan includes unlimited environments.

- There is no limit to the number of API integrations an account can have.

- All our data sources are available on all plans.

- In addition to Data Integration managed sources, you have multiple options for connecting to practically any source. For more information, refer to Custom Data Integration.