Using reverse ETL

Data Integration is an E-L-T platform as opposed to E-T-L. Source connectors load raw data into destination data lakes or warehouses, and Logic rivers enable full ELT orchestration and push down transformations in destinations.

If you want to push data from your data warehouse to other systems, this uses the data warehouse as a source and an API endpoint as the target. Instead of ‘ELT’, think of it as ‘TEL’. The data warehouse feature transforms data into the format needed to send data to the desired destination API, extracts it, and loads it to that endpoint.

Data Integration requirements

To perform Reverse ETL in Data Integration, you need:

- A data warehouse target (not supported for data storage targets)

- The data destination is a REST API endpoint

It is recommended to have a general understanding of the destination API structure and behavior, as you leverage Data Integration’s Action River feature to pass data from a data warehouse table through the corresponding inputs of a REST API endpoint

Consider, you want to update custom HubSpot deal properties based on specific events in your data warehouse (For example, Snowflake). You are tracking free trial account activity, and your Sales team needs visibility into how those accounts use your product. You can automate sending key product usage data from your warehouse to HubSpot by updating custom deal properties.

At Data Integration, tracking free trial account usage is important. To help Sales teams understand trial account activity, three custom product-usage properties should be sent to HubSpot:

- Number of Rivers

- Number of runs

- Number of distinct Sources

Consider that you have this data loaded to our data warehouse in a table in Snowflake called RIVER_EXECUTIONS.

Step 1: Define your dataset to PUSH (the T of “T-E-L”)

You must create a logical step that only returns the data you want to push back to HubSpot. In this example, you must calculate the above measures only for active trial accounts in our system.

A HubSpot deal is auto-generated when creating a new trial account and includes the Data Integration account ID as a custom property by default.

select

deals.deal_id,

runs.rivery_account_id,

runs.rivery_num_of_rivers,

runs.rivery_executions,

runs.rivery_data_sources

from "INTERNAL"."ODS"."HUBSPOT_DEALS" deals

inner join (

select

rivery_account_id,

count(distinct river_id) as rivery_num_of_rivers,

count(distinct run_id) as rivery_executions,

count(distinct datasource_type) as rivery_data_sources

from INTERNAL.ODS.RIVER_EXECUTIONS

Where account_type = ‘trial’

group by rivery_account_id

) runs

on deals.rivery_account_id = runs.rivery_account_id

where deals.isdeleted = FALSE;

Creating three different metrics grouped at the account level for trial accounts. = Joining this to our existing deal pipeline.

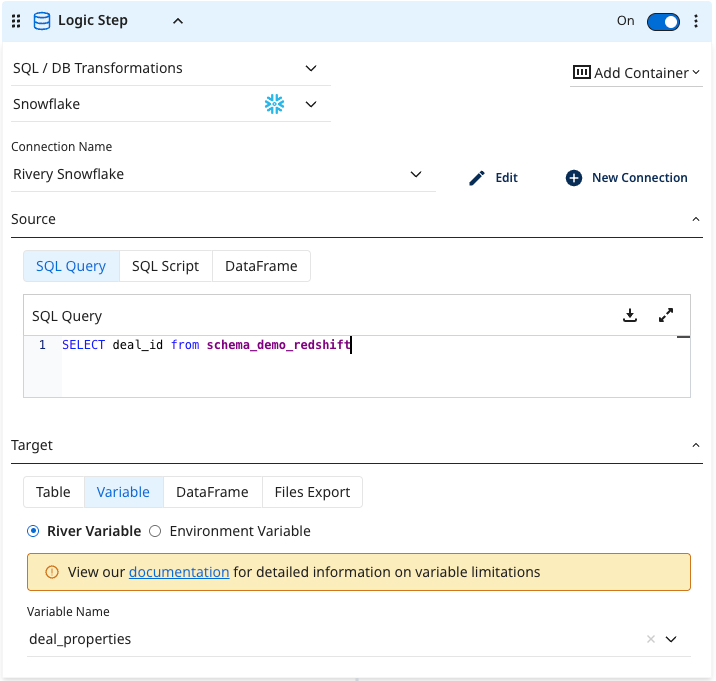

For the Data Integration part. Create a new Logic River and use the SQL/Script type of Logic Step. Choose your target type and connection, and input your source query.

For the Target, define a table in your data warehouse to store these results. This table will be used in the next step. In our example, let’s call this TRIAL_DEALS_PROPERTIES.

Step 2: Match your data format to the expectations of your destination API (more ‘T’)

In this case, you can use the HubSpot endpoint for updating a deal. In each call to this API endpoint, you need a dealid parameter and a request body populated with a properties object listing the properties to update in the deal.

An example request body:

{

"rivery_data_sources": 3,

"rivery_executions": 591,

"rivery_num_of_rivers": 39

}

Using Snowflake’s JSON-building functions, such as OBJECT_CONSTRUCT(), you can create the above object for each deal_id. This lets you pass a deal_id and its corresponding properties into an Action River to make each update call.

An example query using OBJECT_CONSTRUCT() function to produce desired results:

select

deal_id,

object_construct('rivery_num_of_rivers', rivery_num_of_rivers,

'rivery_executions', rivery_executions,

'rivery_data_sources',rivery_data_sources

) as property_values

from "INTERNAL"."AUTOMATION"."TRIAL_DEALS_PROPERTIES"

group by

deal_id,

rivery_num_of_rivers,

rivery_executions,

rivery_data_sources

;

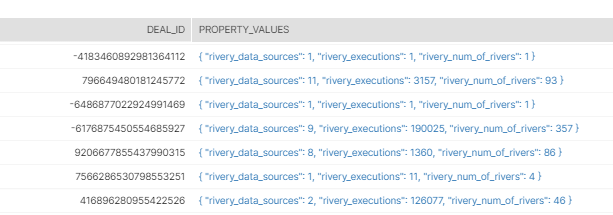

Example Results:

Now, for the next Data Integration part. You can add another logic step using the query above as the source query. However, instead of setting the Target value as a table, you can store our results in a Variable.

This stores the results of the source query in the step into a river variable, which can be used in future logic steps in this river.

Save this river so you don't lose your changes.

Step 3. Create the API call using an action

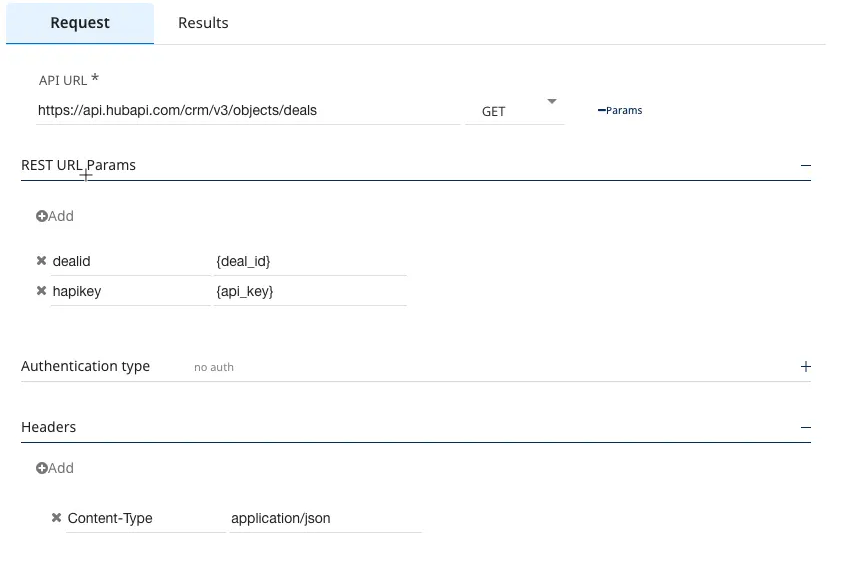

Using the Hubspot documentation to update deal endpoint, you can fill out the REST template provided in a Data Integration Action. (You created a new river so that you can use it in our existing Logic River.)

In the request body, define a variable called properties. After adding the Action as a step in the Logic River, this body contains the properties to update for each call.

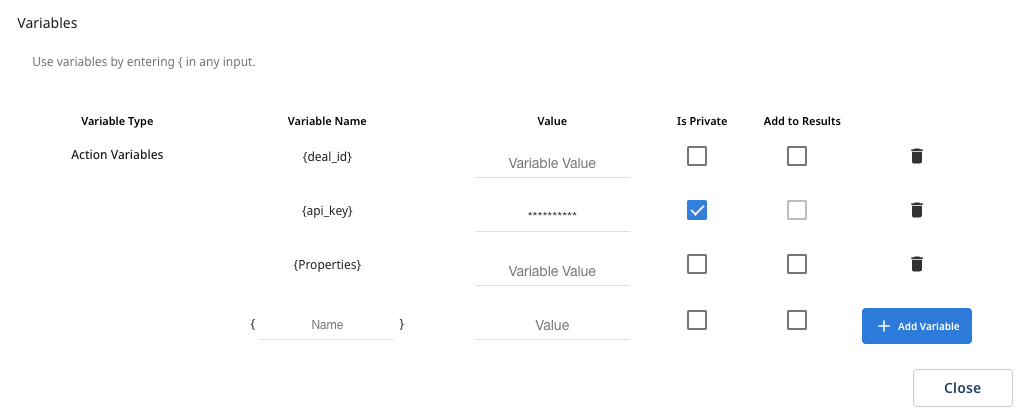

In Data Integration, you must reference variables using the curly brackets. Define as variables.

Step 4: Connect the dots

You must add the Action created in steps 1 and 2 to the Logic River, which connects the whole process.

In the Logic River, add a third step, use the Action logic step type, and select the Action River created in Step 3. In this example, ‘PATCH - HubSpot Deal Update’.

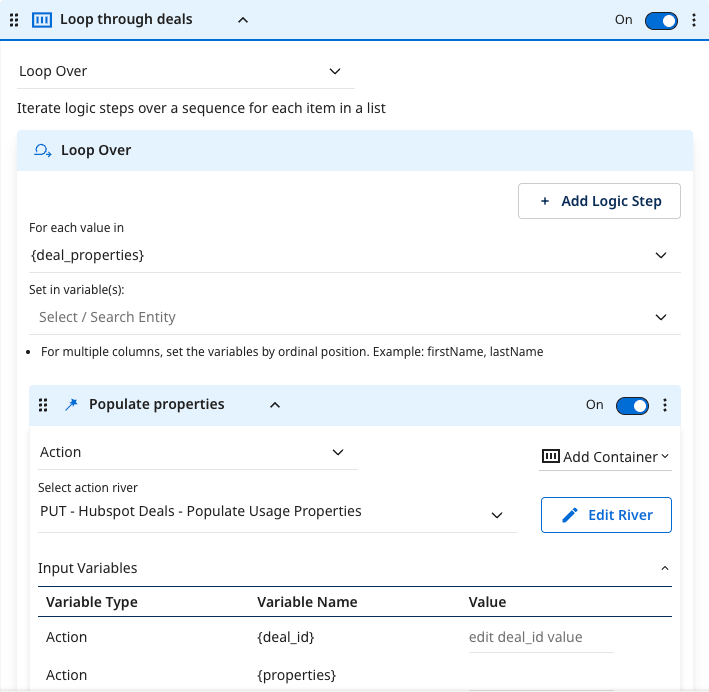

Next, click Container Me on the Action Step to wrap this step in a container. Change the container type to Loop Over from the drop-down on the top left. In this example, send one request per deal_id, so you must loop over Action step in case of multiple deals.

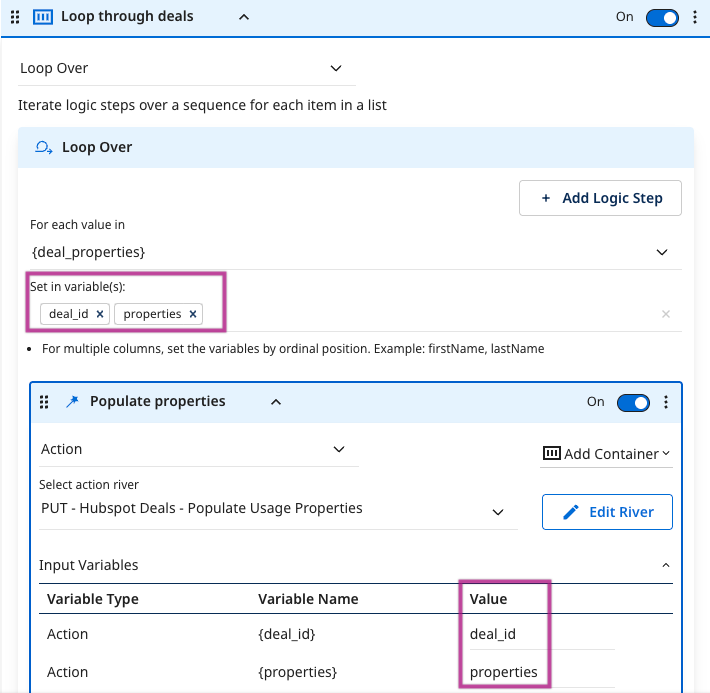

In the for each value in drop-down menu, select the river variable created by the second logic step (called **deal_properties**).

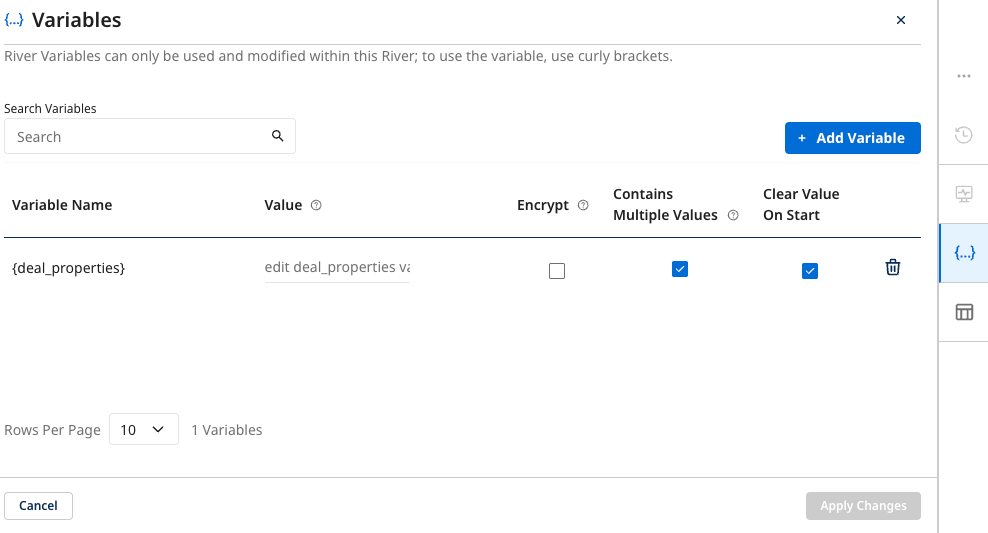

In the Variables menu in the Logic River, ensure to set this variable to accept multiple values.

In the second window, create two iterators, one for the deal_id parameter and one for the properties.

SELECT statement that defined the variable in the previous logic step.

Set the Input Variables in the Action step to match the iterators created. This key connects the data values stored in the river variable to those defined in the API request.

Test your process and Run the river. Contact Data Integration support by clicking the 'Support' icon in the console for assistance.