Configuring Amazon Redshift as a target

To use Amazon Redshift as a target, set up your Amazon Redshift cluster and gather the details that Data Integration needs to connect to it.

Prerequisites

Ensure that you meet the following prerequisites:

- Select a source

Setting up a target requires selecting a Source and creating a connection to it.

- Amazon Redshift connection

Ensure that you have established a connection with Amazon Redshift as your Target.

Procedure

- Click the curved arrow next to Schema on the right side of the row. After the refresh, click the row and choose the Schema where the data will be stored.

- Enter the Table Name.

- Select a Distribution Method (this option is available only when you select Custom Report or Custom Query as the River Mode):

-

All - Involves distributing a complete copy of the table to every node in the cluster. Best suited for tables that are rarely updated or are static (slow-moving). It is not beneficial for small tables, as the cost of redistribution during queries is low.

-

Even - Involves the leader node distributing data rows across slices in a round-robin manner, without considering the values in any specific column. This method is ideal for tables that are not involved in joins. It is also a suitable choice when neither KEY nor ALL distribution methods are advantageous.

-

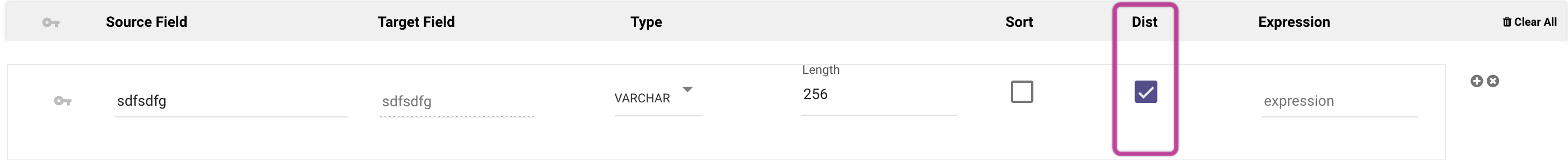

Key - Rows are allocated based on the values in a designated column. The leader node places rows with matching values in the same node slice. This is effective when distributing a pair of tables on their joining keys. This ensures that rows with matching values from the common columns are stored together physically, facilitating efficient join operations.

When using the Key Distribution Method, you must choose a single key column to perform the slicing.

- When using Multi-Tables or Predefined Reports as your River Mode, you can choose a Distribution Method in the Table Settings, which you can access by clicking a particular table in the Schema tab.
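For orientation, the three distribution methods above correspond to the `DISTSTYLE` and `DISTKEY` table attributes in Redshift's `CREATE TABLE` syntax. The following is a minimal Python sketch that builds such a statement; the function name `build_create_table` and the sample table are hypothetical, and this page does not state what DDL Data Integration itself generates.

```python
def build_create_table(table, columns, dist_method, dist_key=None):
    """Build a Redshift CREATE TABLE statement for a distribution method.

    Hypothetical helper for illustration only; not part of Data Integration.
    `columns` maps column names to Redshift type names.
    """
    method = dist_method.upper()
    if method not in {"ALL", "EVEN", "KEY"}:
        raise ValueError(f"unsupported distribution method: {dist_method}")

    if method == "KEY":
        # Key distribution needs exactly one existing column as the key.
        if dist_key is None or dist_key not in columns:
            raise ValueError("KEY distribution requires one existing key column")
    elif dist_key is not None:
        raise ValueError("a key column only applies to KEY distribution")

    column_sql = ", ".join(f"{name} {sql_type}" for name, sql_type in columns.items())
    ddl = f"CREATE TABLE {table} ({column_sql}) DISTSTYLE {method}"
    if method == "KEY":
        ddl += f" DISTKEY ({dist_key})"
    return ddl + ";"


# Example: distribute two tables on their joining key so matching rows share a slice.
orders_ddl = build_create_table(
    "orders", {"order_id": "BIGINT", "customer_id": "BIGINT"}, "key", "customer_id"
)
customers_ddl = build_create_table(
    "customers", {"customer_id": "BIGINT", "name": "VARCHAR(256)"}, "key", "customer_id"
)
```

Distributing both tables on `customer_id` is the "pair of tables on their joining keys" case described for the Key method.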

- Set the Loading mode.

Amazon Redshift offers the flexibility to choose different Merge methods. For more information about these options, refer to the Amazon Redshift Upsert-Merge Loading Mode Options documentation.

- In the Additional Options menu, the following options are available:

- Truncate Columns - handles cases where the length of an array exceeds the maximum VARCHAR length permitted in Redshift. Because Redshift's array type has limited flexibility and array data can change between data loads, Data Integration ensures compatibility by converting arrays to the VARCHAR(max) type. If an array exceeds the maximum VARCHAR length, select the Truncate Columns option (available under Advanced Options in the Target tab) to truncate the array data so that it fits within Redshift's maximum size constraints.

- Compression Update - updates the column compression in the target table during data loading. This occurs only if the target table has not yet been created.

- Keep Schema-binding Views - ensures that all schema-binding views remain intact when employing upsert-merge or overwrite methods. If this option is not selected, any schema-binding views that depend on the target table will be dropped.

- Add Data Integration Metadata - automatically adds three columns to the Target table: last_update, river_id, and run_id. You can also add further metadata fields by using expressions.

This option is available when the Source is in Multi Table mode.
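To illustrate the Truncate Columns option described above, the following Python sketch converts an array to text and cuts it to fit Redshift's VARCHAR limit of 65,535 bytes. It rests on assumptions: the function name `array_to_varchar` is hypothetical, JSON is used as the text form only for illustration, and this page does not state how Data Integration serializes or truncates arrays internally.

```python
import json

# Redshift's maximum VARCHAR size, in bytes.
REDSHIFT_VARCHAR_MAX_BYTES = 65535


def array_to_varchar(values, truncate=False, max_bytes=REDSHIFT_VARCHAR_MAX_BYTES):
    """Serialize an array to text that fits within a VARCHAR column.

    Hypothetical helper: JSON serialization is an assumption made for this
    sketch, not a documented behavior of Data Integration.
    """
    text = json.dumps(values, ensure_ascii=False)
    encoded = text.encode("utf-8")
    if len(encoded) <= max_bytes:
        return text
    if not truncate:
        # Without truncation, an oversized value cannot be loaded as-is.
        raise ValueError(f"value is {len(encoded)} bytes; limit is {max_bytes}")
    # Cut at the byte limit and drop any partial multibyte character at the end.
    return encoded[:max_bytes].decode("utf-8", errors="ignore")


short_value = array_to_varchar([1, 2, 3])
long_value = array_to_varchar(["x" * 10] * 10000, truncate=True)
```

The limit is measured in bytes rather than characters, which is why the sketch truncates the UTF-8 encoding and not the Python string.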

- If you have configured a Custom File Zone, select a "Bucket" and specify a path where your data will be stored. Then set the time period for partitioning within the FileZone folder.

Data Integration can partition the data by insertion Day, Day/Hour, or Day/Hour/Minute. It writes the data files from your sources into folders that correspond to the partition you selected.

- Any "Source to target River" can send data to your Amazon Redshift Bucket.
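The period partitioning described in the Custom File Zone step can be pictured as mapping each insertion timestamp to a folder. The sketch below shows one possible layout; the function name `partition_folder`, the sample path, and the `YYYYMMDD/HH/MM` folder naming are assumptions for illustration, since this page does not specify the folder names that Data Integration actually produces.

```python
from datetime import datetime

# Assumed folder naming per partition period (illustrative only).
PARTITION_FORMATS = {
    "day": "%Y%m%d",
    "day/hour": "%Y%m%d/%H",
    "day/hour/minute": "%Y%m%d/%H/%M",
}


def partition_folder(base_path, inserted_at, period):
    """Return the folder for a file inserted at `inserted_at`.

    Hypothetical helper; `period` is one of the keys in PARTITION_FORMATS.
    """
    try:
        fmt = PARTITION_FORMATS[period.lower()]
    except KeyError:
        raise ValueError(f"unknown partition period: {period}") from None
    return f"{base_path.rstrip('/')}/{inserted_at.strftime(fmt)}"


inserted = datetime(2024, 3, 5, 14, 7)
by_day = partition_folder("rivers/redshift/", inserted, "Day")
by_hour = partition_folder("rivers/redshift/", inserted, "Day/Hour")
by_minute = partition_folder("rivers/redshift/", inserted, "Day/Hour/Minute")
```

A finer period produces more folders with fewer files in each, which can make it easier to locate the files from a specific load.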