Databases overview

Data Integration enables migrating data from legacy and cloud databases to one of the supported Data Warehouses.

Our database connectors are built with correctness, safety, and security. Your destination is always an exact copy of your database source. Data Integration never causes difficulties in your source database, and clients can use a custom file zone to ensure that data does not leave their environment during transit.

You can use simple methods to obtain data from databases without needing access to Binary Logs, in addition to connecting with Binary Log capture tools.

Compatible configurations

The database services listed are fully compatible with cloud platforms, including Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. These services can seamlessly integrate into cloud environments to support diverse data workflows and enterprise use cases.

Supported database services:

Compatibility of versions

| Database | Modes | Versions Supported | Support for Managing Schema Drift |

|---|---|---|---|

| ElasticSearch | Standard Extraction | Scroll API Version 7.10 Indexes API Version 7.10 Mapping API Version 7.10 | ❌ |

| MariaDB | Standard Extraction System Versioning Custom Query | Versions 10.0 and Above | ✅ |

| Microsoft SQL Server | Standard Extraction Change Data Capture Change Tracking | All Versions Sp1 2016 and Above (Standard or Enterprise Editions) All Versions | ✅ |

| MongoDB | Standard Extraction Change Streams | Versions 3.4 and Above Versions 4.0 and Above | ✅ |

| MySQL | Standard Extraction Change Data Capture | All Versions MySQL 5.6 and Above, or MySQL 8.0 and Above | ✅ |

| Oracle | Standard Extraction Change Data Capture | Versions 11g and Above | ✅ |

| PostgreSQL | Standard Extraction Write Ahead Logging (WAL) | All Versions Versions 10 and Above | ✅ |

| Redshift | Standard Extraction | All Versions | ✅ |

| Teradata | Standard Extraction | Version 15.10 | ✅ |

| Vertica | Standard Extraction | All Versions | ✅ |

Supported features

Parquet file conversion

You can convert CSV/JSON files to Parquet files using Amazon S3 as the Target. To learn more, refer to Converting a CSV file to Parquet topic. This topic uses Amazon S3 as a Source, you can use any Database and Google Cloud Storage.

Incremental behavior

Data Integration works in millisecond increments, but you can store data in more granular microsecond or nanosecond increments. When operating incremental runs based on timestamp, it may appear that the most recent record is missing.

Data Integration reads three numbers after the dot when there are six, so '2022-08-14 10:26:52.895132 ' becomes '2022-08-14 10:26:52.895 ' and the last record is missing from the current run when fetching the data.

This record will be retrieved in the next run with updated data.

Changing Data Capture (CDC) metadata fields

CDC is a feature available in database management systems, letting the detection and recording of changes made to data within a database. There are 4 metadata fields commonly used to provide information about the changes made to records.

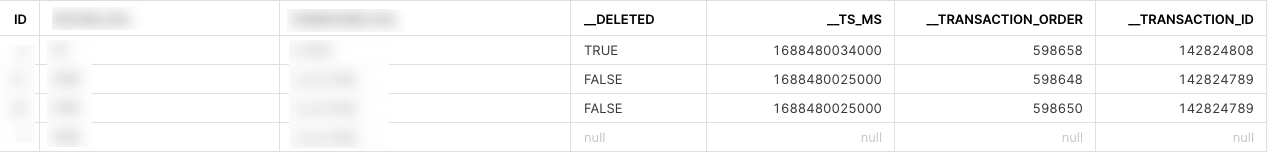

This section details the automatically appended fields (columns) to the table when employing CDC, including:

- __DELETED

- __TS_MS

- __TRANSACTION_ORDER

- __TRANSACTION_ID

__DELETED

The "__DELETED" column indicates rows that are removed from the Source database. In cases of modification, the status is clarified as follows:

- True: indicates that the actual row was deleted from the Source database.

- False: indicates that the row still exists in the Target warehouse but has been updated.

- Null: Indicates that the row remains unchanged in the Target warehouse without any updates.

When a row is deleted from the Source database, it is categorized as a "Hard Delete"(the deletion is irreversible). The CDC process records this event in the logs table, and the corresponding record appears as a "Soft Delete" in the Target warehouse. This signifies that the most recent version of the row still exists in your Target warehouse. The "Soft Delete" approach in database table management maintains a record of the deleted data, providing the possibility of data recovery if needed, as the deleted rows persist in the Target warehouse.

A "Soft Delete" retains the record in the table without physically removing it, leading to its continued presence in your Target warehouse and inclusion in backups unless additional steps are taken to exclude it.

__TS_MS

The TS_MS column, short for the TIMESTAMP Milliseconds column, is designed to convey integer values or null entries. Depending on the circumstance, the timestamps within this column are displayed in integer or null. This column serves the purpose of documenting the timing of changes when employing the CDC method.

___TRANSACTION_ORDER

This column indicates the sequence in which the transaction was emitted from the database. It derives its significance from the transaction order within the database log or replication stream. Ensuring that __Transaction_Order is unique for each transaction and accurately mirrors the actual commit order is crucial.

___TRANSACTION_ID

This serves as a distinct identifier for each transaction generated by the database. The nature of this identifier depends on the database system in use and may be derived from factors such as log position, GTID, or LSN. For instance, in MySQL, the __Transaction_ID field might be assigned the GTID of the transaction, while in PostgreSQL, it could be configured to reflect the LSN.

Here is an example:

Add a calculated column

One of the key features of Data Integration is the ability to add calculated columns using expressions. This powerful functionality allows the user to customize their output table beyond the limits of the raw metadata extracted from the Source by including expressions, mathematical or string operations, or simple functions to enrich and tailor the output to their use-case.

For more detailed information, refer to Targets document.

Limitations of Kafka in Change Data Capture (CDC)

Our CDC implementation uses Kafka for file screening, and it comes with a limitation:

Topics (representing tables and columns) in Kafka cannot be named using characters like underscores (_) or commas (,).

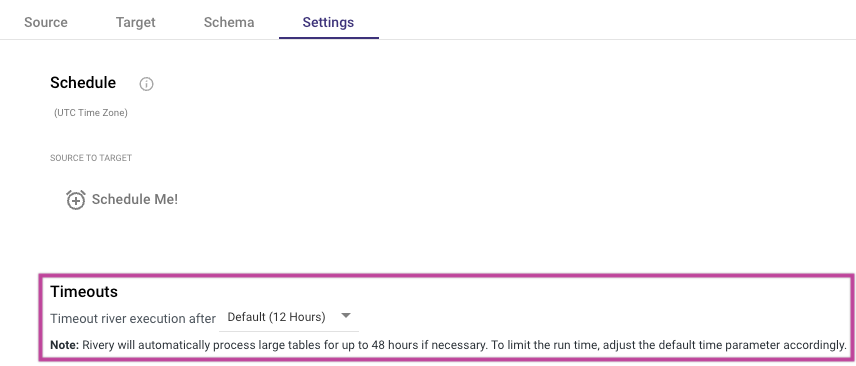

Extended execution time for large tables

Data Integration provides an automatic extension of execution time for processing large datasets, ensuring efficient data loading without manual adjustments. This feature enables Rivers to handle extensive data loads by extending the processing time automatically for up to 48 hours, as needed, to ensure successful completion of data operations.

Dynamic adjustment mechanism

Data Integration dynamically adjusts the execution time based on table size and row count. When large tables are detected, Data Integration automatically activates a long-duration mode, allowing the data processing to continue without user intervention.

Conditions for extended execution time activation

The extended execution time feature is automatically enabled when the following conditions are met:

- Table size: The dataset’s table size is greater than or equal to 100 GB.

- Row count: The dataset contains 50 million or more rows.

- Extraction method: The extraction method selected is “All.”

- River mode: The River is configured in “Multi-tables” mode.

User control and customization

Users can customize the execution timeout limit in the River’s settings tab to suit specific requirements. By setting a custom timeout, you define the maximum duration allowed for execution. If the custom timeout is reached, the process will terminate according to the set limit, providing control over resource management and operation duration.

Data Integration will automatically process large tables and reports for up to 48 hours, unless the user modifies the default timeout setting.

Factors influencing storage efficiency and performance in databases

The size and storage efficiency of both tables in a database or data source is influenced by factors such as data types, compression, indexes, the number of records or tables, and the configuration of storage, including storage zones. Optimizing these factors is crucial for efficient storage, improved performance, and scalability in a database environment.

In addition to tables, storage in a database is also influenced by similar factors:

- Data types: Different data types stored in tables occupy varying amounts of storage space.

- Table size: **The size of individual tables depends on the amount of data stored within them.

- Number of tables: More tables stored in the database lead to increased storage requirements.

- Compression: Compression techniques applied to tables can reduce their storage size.

- Indexes: Similar to tables, indexes created on tables for efficient data retrieval also add to storage requirements.

- Storage configuration: The configuration of storage, including factors like block size, file system type, and storage zones, impacts storage efficiency, performance, and scalability.

Connection

Click the sections below for details on how to connect to our supported databases:

To view the connectivity options for our databases, consult the connectivity option documentation.

Mapping error message

If you encounter the following message: ActionScriptActionScript The schemas tables columns: test_Ñ, משתמשים, خش, C_TAMAÑO_PASTEL and 2 more contains invalid chars, or does not exists, and can not be pulled.View Logs It indicates that invalid characters are present in the specified tables or columns. As a result, these tables or columns will not be retrieved and will not appear in the mapping.