SQL server CDC walkthrough

Change data capture extraction

SQL Server Change Data Capture (CDC) simplifies the extract, transform, and load processes. It also records data changes such as inserts, deletes, and updates, but it provides more details than SQL Server Change Tracking. The mechanism for capturing and the information captured are distinct.

The information is retrieved in "Change Data Capture" by querying the online transaction log on a regular basis. The procedure is asynchronous. Database performance is unaffected, and performance overhead is lower than with other solutions (for example, using triggers).

SQL Server CDC requires no schema changes to existing tables, no columns for timestamps are added to the tracked (source) tables, and no triggers are created. It collects data and stores it in tables known as change tables.

When you apply CDC to a table, SQL Server automatically creates a second table to track all DML (inserts, updates, and deletes) to the table. SQL Server internally tracks all DML to the base table by reading committed transactions from the transaction log, similar to how replication is implemented. This log reading occurs in the background and adds no additional work to the originating transaction.

- A CDC table must have a Primary Key to be tracked by the CDC mechanism in SQL Server, as required by SQL Server.

- Data Integration does not support the

@index_nameoption.

CDC point in time position feature

The CDC Point in Time position feature lets you gain deeper insights into the operational details of a River's streaming process. This feature is essential for data recovery and synchronization, enabling you to locate and retrieve data from a specific point in history using the exact information stored in the CDC log position. For more information, refer to our documentation.

A 'Sequence' CDC deployment

Discrepancies in transaction records can arise when two users simultaneously execute identical transactions, causing conflicts in the timestamp field. Data Integration implemented a "sequence" Change Data Capture (CDC) mechanism to tackle this issue.

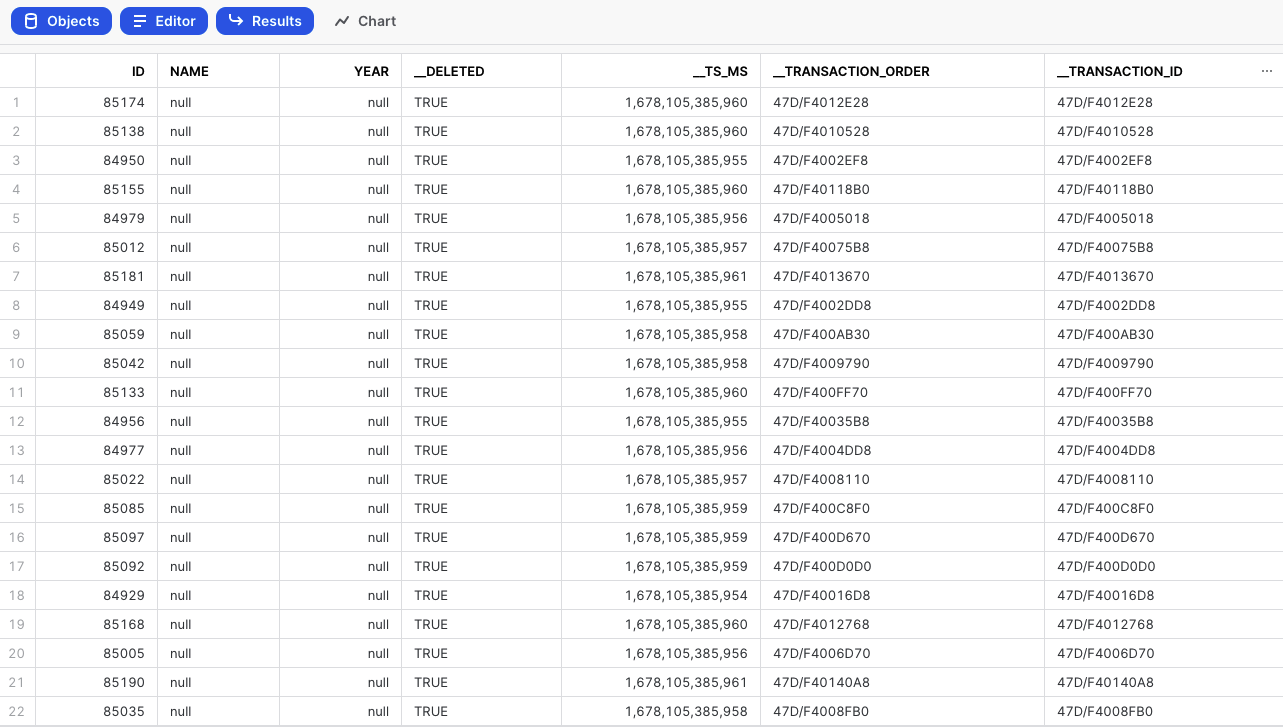

Data Integration enhances each emitted record from the database by incorporating two extra metadata fields: __transaction_id and __transaction_order.

-

The __transaction_id field serves as a unique identifier for each transaction, ensuring that no two transactions share the same identifier. This uniqueness provides precise identification and differentiation between transactions, mitigating conflicts arising from identical timestamps.

-

The __transaction_order field denotes the order in which the transactions were emitted from the database. By incorporating this field, the sequencing of transactions can be accurately maintained, enabling downstream systems such as Apache Kafka or AWS Kinesis to process and order transactions correctly.

The inclusion of these metadata fields guarantees that the ordering of transactions is preserved throughout the River. As a result, smooth and accurate transaction flows can be achieved, resolving the discrepancies that previously arose from transactions with identical timestamps.

The additional fields are depicted in this table:

For further details about Change Data Capture (CDC) Metadata Fields, refer to Database Overview document.

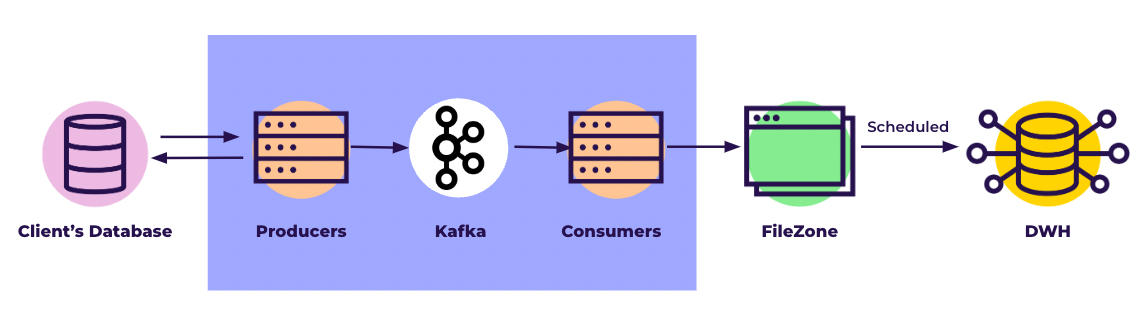

Architecture diagram

- Producers - The process of reading the changes made to the source database.

- Kafka - Streaming service to ensure that changes are delivered securely and credibly.

- Consumers - The process of reading the Kafka Stream and saving the changes as files.

- FileZone - The storage that holds the files that will be loaded into the target data store.

- DWH - The target Data Warehouse into which the changes will be loaded.